Photo courtesy Paul Hanaoka on Unsplash

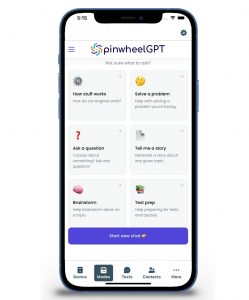

Chatbots are the talk of the tech town nowadays. It all started with ChatGPT earlier this year and now includes everything from Microsoft’s AI-enhanced Bing to Google’s Bard. Chatbots offer assistance with everything from content creation to coding, booking vacations, researching projects, and more. The larger implications of chatbots and AI remain to be seen. Supporters laud the efficiency gains while naysayers fear the threat to creative and assistive professions. One innovation in this space, however, stands out from the pack: PinwheelGPT, an AI chatbot for kids.

Developed in partnership with ChatGPT, PinwheelGPT uses artificial intelligence (AI) to offer child-safe, age-appropriate responses, with parental monitoring. Kids aged 7-12 can ask whatever questions are on their minds, and the answers will be tailored to them, with no complex or advanced vocabulary and no explicit content. There won’t even be links or images.

The idea is to help kids become more informed and educated on topics they are curious about. The company says parents can use a child’s inquiries, which can be monitored from a parent dashboard, to start conversations with their kids.

Do We Need a Chatbot for Kids? The Good

PinwheelGPT basically takes the idea of a voice assistant like Alexa or Google Assistant (Amazon even makes Echo smart speakers for kids) to another level by adding a more detailed and conversational spin. It also helps familiarize the younger generation with technology that will be integral to their future careers.

PinwheelGPT is available free on any platform (iOS, Android), not just on Pinwheel phones.

I tried it out, first creating a parent account, then an account for my son, then logging out and logging back in as him to use it as he might. You tap “Start new chat,” just as you would with a chatbot like ChatGPT, and ask your question. I asked, “what are good novels (non-graphic) for 12-year-old boys?” I was given a list of five, including Harry Potter and Percy Jackson titles (which my son has already read) along with a few others. I decided to use the conversational aspect and replied, “I have already read these. Do you have any other suggestions? I like books like Wings of Fire.” It took just a few seconds for the AI to deliver five more suggestions similar to the Wings of Fire books. The suggestions were great; several were books that he has already read and loves.

I then decided to try something more intense and asked, “there’s a boy bullying me in school. What should I do?” The response was as follows:

“I’m really sorry to hear that you’re dealing with a bullying situation in school. It’s important to take action and address this issue. Here’s what you can do:

1. Talk to someone you trust: Share your feelings with a trusted adult, such as a parent, teacher, or school counselor. They can provide guidance and support.
2. Stay calm and confident: Bullies often look for reactions, so try your best to stay calm and ignore their hurtful behavior. Remember that their actions say more about them than they do about you.
3. Be assertive: Stand up for yourself by calmly telling the bully to stop or by using assertive body language. Avoid responding with aggression or becoming physical, as that can escalate the situation.
4. Buddy up: Stick with friends or find a buddy to walk with, as there is safety in numbers. Bullies are less likely to target someone surrounded by others.
5. Report the bullying: Inform a teacher, principal, or another trusted adult about what’s happening. Provide details such as when and where the bullying occurred. Schools have policies in place to address bullying and can take appropriate action.

Remember, you’re not alone, and it’s important to seek help. Your well-being is a priority.”

This is all sound advice.

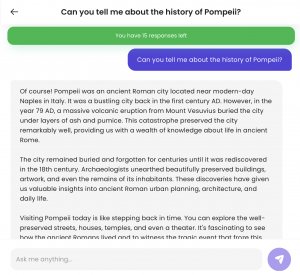

Finally, I wanted to test historical knowledge. Since we’re about to embark on a family trip to Rome, Italy, I asked something my son might ask: “Can you tell me about the history of Pompeii?” The reply was detailed, informative, and accurate, and it gave a good synopsis of what happened in 79 AD, the resulting fallout, and what Pompeii is like today.

I logged out and logged back into the parent side, which gave me a summary of “his” conversation, replicated exactly as he saw it. I could see both the questions he asked and the answers he received.

Do We Need a Chatbot for Kids? The Bad

I’ve explored the positive side of a chatbot for kids, but as with any technology, there can also be an ugly side.

A chatbot for kids raises questions about the wisdom of relying on technology as a third parent. The logical step for a child who has a question is to ask a parent or another authority figure, like a teacher. Asking an artificial intelligence seems like an unsafe, unreliable route, even if parents can step in when they are unhappy with a response or concerned about the questions their kids are asking.

From an educational standpoint, it removes the middleman, allowing kids to research projects without parents having to embarrassingly Google something because they don’t know the answer. But the response to my Pompeii question raises an interesting concern: kids might try to copy PinwheelGPT answers verbatim for schoolwork (which admittedly could be done with regular chatbots as well and is already a growing problem in schools). Because PinwheelGPT writes its replies with simpler vocabulary, however, it is even easier for kids to copy and paste them and present them as their own, whereas ChatGPT replies are not convincing as the work of a 13-year-old.

A service like PinwheelGPT could theoretically open doors to conversation. Maybe parents aren’t aware that their kids are curious about things like sex, drugs, alcohol, or even topics like bullying, weight loss, or external beauty. A child might be more comfortable asking a chatbot such questions at which point parents can step in and have important conversations face-to-face.

But would a child even ask these important, embarrassing questions if (or once) they know their parents can see what they’re asking? At that point, anonymity and privacy go out the window, along with a sense of trust.

What’s more, parents should be encouraging children to speak more openly to them rather than resorting to an AI. It’s already a growing problem that kids are “learning” and “researching” topics on social media, getting incorrect and even potentially damaging information. Promoting a healthy and open dialogue with trusted sources in person is crucial. While there’s a lot of technology that can help in this respect, chatbots for kids seem like a step too far.

Dane Witbeck, CEO and Founder of Pinwheel, says PinwheelGPT is a “fun and educational way for today’s kids to get in on the exciting power of potential of ChatGPT.” The company cites Common Sense Media data that indicates kids are already experimenting with ChatGPT. The idea is that since they are doing it anyway, why not guide them towards a safer, more age-appropriate version?

This point has plenty of validity, provided the tool is used correctly. But the very fact that a parent might say, “here’s an AI just for you to use to ask questions” suggests to a child that the parents themselves don’t want to answer them.

There’s another factor that comes into play as well: cost. Kids can use PinwheelGPT to ask up to 20 questions per month for free (about an hour of Q&A monthly, PinwheelGPT says), but parents need to pay $20/mo. or $80/yr. for kids to get unlimited responses.

Would You Use PinwheelGPT?

The danger with chatbots and kids, overall, is that the more comfortable kids get conversing with non-humans, something they already do on a large scale through social media, the less comfortable they will be conversing with real people.

Chatbots present opportunities for streamlining business processes, eliminating mundane, repetitive work, and creating greater efficiencies. They also pose real dangers to the economy, the job market, and creative professionals (just ask everyone currently participating in the Hollywood writers’ strike). Nonetheless, to push back against a technology that is inevitably going to become part of our daily lives would be unwise. Kids should be educated on chatbots: how to use them, their value, and their pitfalls. But more importantly, kids need to learn those all-important soft skills, too. Without the soft skills that non-technology-based interaction provides, kids will have difficulty succeeding in any career path they want to pursue. The more we send them to digital devices to find answers, companionship, education, and now even conversation, the less comfortable they’ll be using anything but.

Bottom line: parents, whether you let your kids use chatbots or not, constantly reinforce to them that they can ask you anything, and that you’ll take the time to answer. You don’t have the same level of knowledge and information processing power as a chatbot. But what you can do is work with your child to help them find the answer they seek using reputable sources. Doing so will help you form the types of bonds that a chatbot will never, and should never, have with a young, developing mind.

-30-
