Less than a year after it introduced tools to label content generated with artificial intelligence, social media and video sharing site TikTok is now automatically identifying AIGC (AI-generated content) made on other platforms.

Like other online platforms, TikTok is looking to help people identify what’s real and what isn’t on its site, and to spot disinformation and AIGC posted there. It’s one way to build trust, respect and a certain credibility in a media marketspace already dangerously corrupted with mis-, dis- and artificially created information. It’s part of a big push for digital watermarking and labeling of AI-generated content, particularly in election years but certainly now more than ever in any case. Google said last year that AI labels are coming to YouTube and its other platforms. In February, Meta said it will use “Made with AI” labels to make it easier to identify images, and eventually video and audio, generated by artificial intelligence tools.

In addition to its labelling scheme, TikTok has also launched media literacy campaigns developed in partnership with leading online safety and identity protection experts in the U.S. and Canada.

“AI enables incredible creative opportunities, but [it] can confuse or mislead viewers if they don’t know content was AI-generated,” the company said when announcing the new practice. “Labeling helps make that context clear—which is why we label AIGC made with TikTok AI effects and have required creators to label realistic AIGC for over a year.”

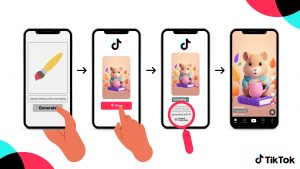

It was back in 2023 when TikTok announced policies and procedures regarding AI content on its site, and it introduced labeling mechanisms for its members and content creators. Now it’s implementing what it calls Content Credentials, and it will use the new technology to start automatically labelling AI-generated content that’s made on other platforms.

Developed with the Coalition for Content Provenance and Authenticity (C2PA), the Content Credentials architecture provides a comprehensive model for storing and accessing cryptographically verifiable information that publishers and consumers can use when determining the authenticity of media. Content Credentials attach metadata to content, which can be used to instantly recognize and label AIGC. TikTok rolled out the capability for images and videos first; labelling for audio-only content will follow.

Content Credentials remain attached to TikTok content when downloaded, so anyone can make use of C2PA’s Verify tool to help identify AIGC that was made on TikTok and even learn when, where and how the content was made or edited. Other platforms that adopt Content Credentials will be able to automatically label it.

TikTok says it also consulted with its Safety Advisory Council membership to develop its AI ‘warning’ labels for AIGC, and it spoke with leading experts from industry and academia who study just how online video viewers respond to different kinds of AI labels.

The most effective labelling technique should empower good decision-making by content creators and consumers alike. Phrases and labels such as “AI Generated,” “Generated with an AI tool,” and “AI manipulated” clearly flag AI-generated content, regardless of its accuracy or integrity. Content tagged with phrases like “Deepfake,” “Artificial” and “Manipulated,” meanwhile, can be seen as misleading content, whether AI was used or not.

In all cases, content production, implementation, and objectives should be clearly disclosed so viewers can make the best decisions about what they are seeing, hearing, or learning.

To further help people spot AIGC and misinformation, TikTok has launched media literacy campaigns, developed with guidance from experts including MediaWise in the U.S. and MediaSmarts here in Canada, that offer more tips and techniques to help viewers distinguish between fact and fiction in the content they consume online.

MediaSmarts, the Canadian charitable organization for digital media literacy, is serving up an online safety resource for parents, guardians, and caregivers to help their teens safely navigate various online spaces. Designed by MediaSmarts in collaboration with TikTok, Talking TikTok: A Family Guide has practical advice to help create a positive app environment where the well-being of youth on the platform is a priority for all involved. MediaSmarts has also authored many other educational resources, available on its website, led research projects, and contributed to global initiatives surrounding digital media literacy, safety, privacy and security.

In a related move, TikTok is joining the Adobe-led Content Authenticity Initiative (CAI) to help drive Content Credentials adoption on its platform and the wider online space. “At a time when any digital content can be altered, it is essential to provide ways for the public to discern what is true,” said Adobe General Counsel and Chief Trust Officer, Dana Rao. “Today’s announcement (by Adobe and TikTok) is a critical step towards achieving that outcome.”

Taking these first steps means the increase in auto-labelled AIGC on TikTok may be gradual at first, as content needs to have Content Credentials metadata in place before TikTok can identify and label it. However, as other platforms also implement the standards, more content can be labelled. As mentioned, various social media platforms are taking steps to flag, tag or otherwise label content created with artificial intelligence.

Many are asking, of course, why stop at content in personal social media posts?

What about corporate digital marketing and advertising? Public relations. Hollywood movies. Election campaigns. Adult content. And news and information in a more general sense.

We use – and need – labels and warnings and tags and flags for all sorts of content, not just the artificially intelligent kind. It was the Theatres and Cinematographs Act of 1911 that first made use of labels and categories – and censorship – to rate films in Ontario (classifications are a provincial matter in this country). Government regulations may not be popular, but they can be more effective than the ones business puts on itself.

-30-