If you wondered why AI, big data analysis, and other high-tech tools are all the rage these days, some would say, “It’s the plains wanderer, of course!”

Artificial intelligence and high-tech digital devices like bioacoustics recorders, hydrophones, and remote sensing devices are given credit for helping rediscover the critically endangered bird in Australia (none had been seen – or heard – in nearly 30 years!).

Finding the wanderer (a small but colourful grassland-dwelling bird) is just one example of how technology and cloud-based data repositories are being used to monitor, measure, and maintain other endangered animal populations, here in Canada and around the world.

Knowing where animals of interest live (and how many are living there) helps inform decisions about land preservation and animal protection. In the past, information about animal population and location was often collected using rather harsh, intrusive catch-and-release strategies; now just listening can work.

A composite image shows an ʻAnianiau, a small honeycreeper, and an audio device measuring decibels. Perch supplied image.

In one of the most recently announced examples, Google DeepMind released an updated AI model trained on wildlife sounds, called Perch.

First unveiled some two years ago, the AI model was designed to help researchers analyze bioacoustics data, mostly recorded bird sounds, to aid in species identification and boost conservation efforts.

It’s not just for the birds now, however.

With Perch 2.0, the sounds and vocalizations of amphibians, insects, mammals, and more have been added. Even anthropogenic sounds (noises made by human activity, machinery, and the like) have been added, nearly doubling the database’s original size to thousands (some say millions) of hours of audio data.

Audio data captures and conveys analog sound waves in a digital format. Information about sound characteristics, such as frequency (high or low pitch) and amplitude (loudness or volume), is converted into bits and bytes from recorded and sampled audio. Analysis of such data can be applied to speech recognition and therapy, music theory, and environmental assessments.
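To make the idea of sampled audio concrete, here is a minimal sketch in Python. It is an illustration only, not part of Perch or any tool mentioned here: the sample rate, the 440 Hz test tone, and all function names are assumptions chosen for the example. It samples a pure tone, stores it as 16-bit values, and then recovers the two characteristics described above, frequency and amplitude, from the stored numbers.

```python
import math
import struct

SAMPLE_RATE = 8000  # samples per second (assumed value for this sketch)
FULL_SCALE = 32767  # largest value a signed 16-bit sample can hold


def sample_tone(freq_hz, amplitude, duration_s, sample_rate=SAMPLE_RATE):
    """Sample a sine wave and quantize each sample to a 16-bit integer."""
    count = int(duration_s * sample_rate)
    return [
        round(amplitude * FULL_SCALE * math.sin(2 * math.pi * freq_hz * i / sample_rate))
        for i in range(count)
    ]


def peak_amplitude(samples):
    """Loudness proxy: largest absolute sample, as a fraction of full scale."""
    return max(abs(s) for s in samples) / FULL_SCALE


def dominant_frequency(samples, sample_rate=SAMPLE_RATE):
    """Pitch proxy: frequency of the strongest bin in a naive DFT."""
    n = len(samples)
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate / n


# A tenth of a second of a 440 Hz tone at half volume.
samples = sample_tone(freq_hz=440, amplitude=0.5, duration_s=0.1)

# The "bits and bytes": two bytes per sample, little-endian.
pcm_bytes = struct.pack("<%dh" % len(samples), *samples)

freq = dominant_frequency(samples)
peak = peak_amplitude(samples)
```

Real field recordings are far messier than a single tone, and production tools use fast Fourier transforms and spectrograms rather than this slow loop, but the principle is the same: pitch and loudness can be read back out of a list of numbers.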

The fact that Perch can be used to predict and identify which animal species are present in a given environment by listening to an audio recording is only part of the value proposition. The Perch development team (see caption below) has also provided online tools so other researchers can label and classify sounds with just a single example, and monitor species for which there is very little existing training data. Even juvenile bird calls (which are often quite different from the calls of adults of the species) can be specified.

Dr. Daniella Teixeira places an acoustic monitoring device in an Australian forest. Audio data from devices like these are collected into online databases and conservation tools. The research and development team at Perch includes Bart van Merriënboer, Jenny Hamer, Vincent Dumoulin, Lauren Harrell, and Tom Denton, along with Otilia Stretcu from Google Research. Collaborators include Amanda Navine and Pat Hart at the University of Hawaiʻi, as well as Holger Klinck, Stefan Kahl, and the BirdNet team at the Cornell Lab of Ornithology. Perch supplied image.

Given just one example of a sound, a vector search with Perch returns the most similar sounds in a dataset. Vector searches, powered by machine learning algorithms, can find results across different content types like text, images, and audio, even if the keywords don’t exactly match. The search results can then be marked as relevant or irrelevant.
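For readers curious about what a vector search does under the hood, the following Python sketch shows the general technique. It does not use Perch or its actual interface. The three-number "embeddings" and clip names are made up for illustration (a real model produces vectors with many more dimensions), and cosine similarity is one common way to compare vectors, assumed here for simplicity.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(query, dataset, top_k=3):
    """Rank every clip by similarity to the query and return the top_k matches."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in dataset.items()]
    scored.sort(reverse=True)
    return [(name, score) for score, name in scored[:top_k]]


# Hypothetical embeddings: each recording reduced to a short list of numbers.
dataset = {
    "clip_001": [0.9, 0.1, 0.0],
    "clip_002": [0.8, 0.2, 0.1],
    "clip_003": [0.0, 0.1, 0.9],
    "clip_004": [0.1, 0.9, 0.2],
}

# The embedding of the single example sound a researcher starts with.
query = [1.0, 0.1, 0.0]

results = vector_search(query, dataset, top_k=2)

# A reviewer then marks each hit as relevant or irrelevant (labels invented here).
labels = {name: "relevant" for name, _ in results}
```

In this toy dataset the two closest matches are `clip_001` and `clip_002`, whose numbers most resemble the query. The marked results could then serve as labelled examples for training a small classifier.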

Interestingly, the new Perch model also uses audio data and recorded material from public sources like Xeno-Canto and iNaturalist, which both hold a wealth of accessible materials.

iNaturalist, for example, is a citizen science platform and sound dataset with more than 230,000 audio files from some 5,500 species, contributed by more than 27,000 recordists worldwide. The dataset encompasses sounds from birds, mammals, insects, reptiles, and amphibians, with audio and species labels derived from observers’ reports.

With such resources openly available, other researchers can develop their own AI models for specific purposes, which many Canadian bioresearchers (and bird fanciers) have done.

HawkEars is one example: a deep learning AI tool developed specifically for CanCon purposes, namely to recognize the calls of specific Canadian bird and amphibian species.

Some of the hundreds of thousands of training samples used by HawkEars come from open sources like iNaturalist and the Hamilton (Ontario) Bioacoustics Field Recordings, as well as Google, but HawkEars takes a regional approach to narrow its focus, boost data quality, and enhance label specificity.

HawkEars was developed by computer scientist Jan Huus and Dr. Erin Bayne from the University of Alberta, with support from the Canadian not-for-profit biodiversity firm Biodiversity Pathways and an organization called the Alberta Biodiversity Monitoring Institute (ABMI).

ABMI’s Bioacoustic Unit and its partners at the University are an invaluable resource for wildlife audio recording best practices. ABMI image.

ABMI monitors and reports on biodiversity status throughout its home province, and it also makes an open data platform available to researchers. Raw data, derived data products, mapping information, and more are available from ABMI, Biodiversity Pathways, and Wildtrax, a dedicated online platform for storing, managing, processing, and sharing environmental sensor data.

ABMI’s Bioacoustic Unit and its partners at the University are also an invaluable resource for wildlife audio recording best practices. In addition to recording in the field, the unit provides data analysis, categorization, and result reporting.

Thousands of Wildlife Acoustics Song Meters (SM4 model shown) record automatically and autonomously. ABMI image.

ABMI uses hundreds of autonomous recording units (ARUs) to remotely survey bird and amphibian species each summer.

The Wildlife Acoustics Song Meters SM3 and SM4 models record autonomously for long periods of time, and an upgraded model, the SM4TS, uses GPS and clock synchronization to help researchers triangulate a vocalizing animal’s specific location. Thousands of recording devices are used to gather the sounds of nature in remote regions across the country, resulting in millions of hours of audio documenting birds and amphibians here.
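Why does clock synchronization matter for locating an animal? A call reaches nearby recorders at slightly different moments, and those differences constrain where the caller can be. The Python sketch below illustrates that general idea (often called time-difference-of-arrival localization) under simplifying assumptions: flat ground, a fixed speed of sound, perfectly synchronized clocks, and a simple grid search. It is not a description of how the SM4TS or its software works, and the recorder layout and function names are invented for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, roughly, in air at 20 °C


def arrival_times(source, recorders):
    """Time for a sound to travel from the source to each recorder, in seconds."""
    return [math.dist(source, r) / SPEED_OF_SOUND for r in recorders]


def locate(recorders, times, extent=100, step=1):
    """Grid search for the point whose time differences best match the observed ones.

    Only differences relative to the first recorder are used, so the (unknown)
    moment the animal actually called cancels out.
    """
    observed = [t - times[0] for t in times]
    best_point, best_error = None, float("inf")
    for x in range(0, extent + 1, step):
        for y in range(0, extent + 1, step):
            candidate = arrival_times((x, y), recorders)
            predicted = [t - candidate[0] for t in candidate]
            error = sum((o - p) ** 2 for o, p in zip(observed, predicted))
            if error < best_error:
                best_point, best_error = (x, y), error
    return best_point


# Four hypothetical recorders at the corners of a 100 m square.
recorders = [(0, 0), (100, 0), (0, 100), (100, 100)]

# Simulate a bird calling from a known spot, then try to recover that spot.
true_source = (30, 70)
times = arrival_times(true_source, recorders)
estimate = locate(recorders, times)
```

Across a 100 m array the arrival times differ by a fraction of a second at most, which is why recorders whose clocks drift apart cannot be used this way.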

Other organizations are singing from the same book: Biodiversity Pathways has joined with the Boreal Avian Modelling Project (BAM) to develop and disseminate reliable, data-driven, and model-based science products to support migratory bird management and conservation right across the boreal region of North America.

For over 20 years, BAM operated as a collaborative, multi-institutional academic project through the University of Alberta and l’Université Laval; beginning earlier this year, it found a new home with Biodiversity Pathways.

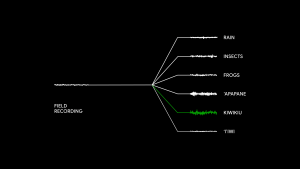

This illustration of a mixture of audio from different species and environmental factors demonstrates how a single field recording can contain numerous sources of sound: vocalizations from several animal species as well as natural sounds like rain or wind. Perch supplied image.

The BAM database was created by collating and harmonizing avian data gathered across Canada and the U.S. Organizers say that through enhanced data sharing, avian research and bird conservation can accelerate.

One recent example coming out of all the research and data collection is the State of Canada’s Birds report, the published result of a partnership between the federal government and avian advocacy organizations.

Birds Canada and Environment and Climate Change Canada based the report on 50 years of bird monitoring data, much of it collected by citizen scientists. The report cites an overall crisis facing bird populations, mentions several threats to native and migratory birds, and lists several possible remedies and solutions.

While the tools and technologies that we use to gather wildlife data have changed dramatically over the years, from binoculars to artificial intelligence, the goal is very much the same.

It’s for the birds. The crickets. The frogs. And all of us.
