By Gadjo Cardenas Sevilla

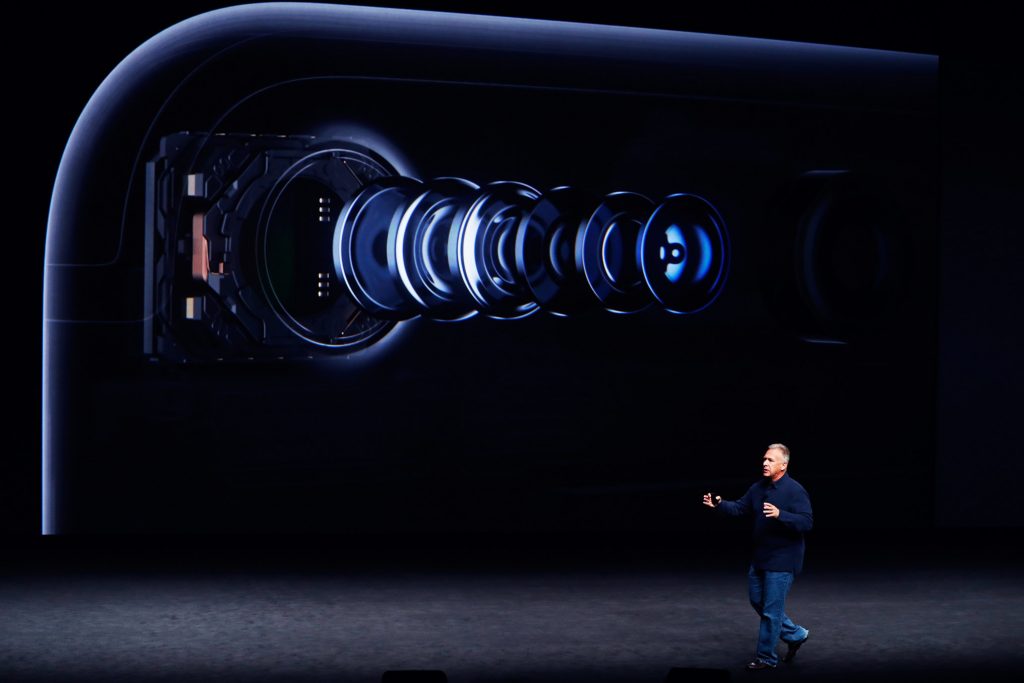

Sensors and glass may still determine the quality of photos and videos today, but that is changing dramatically thanks to computational photography. Computational photography uses powerful processors, the cloud and software to capture and output images in ways that were not possible before.

Rather than leaning on optics alone, computational photography relies on sensors, image processors and algorithms working together to create accurate and attractive photos and videos.

An iPhone’s camera makes 1 billion calculations per second to help create an accurate and stunning photograph. This includes compensating for the lighting conditions, determining depth of field, calibrating the colour cast, and at times taking multiple photos in quick succession and merging them into the best possible version. How serious is Apple about its camera’s capabilities? 800 engineers were said to have worked on the iPhone 6S camera to ensure that it could shoot above-average photos and video.

Compare this to the way traditional cameras need to be manually set for ideal shooting conditions, and it is like having a master photographer inside your smartphone.

Instead of taking one photo when you press the shutter button, Google’s Pixel smartphone takes several images on a low-resolution sensor. These images are merged to create a high-resolution image that omits the bad parts of the photos and enhances the good parts.
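To get a feel for how merging a burst can "omit the bad parts", here is a minimal sketch. It is not Google's actual pipeline: it assumes the frames are already aligned and simply takes the median of each pixel across the burst, so a glitch that appears in only one frame is discarded. It does not attempt the gain in resolution, and the function name `merge_burst` and the sample values are hypothetical.

```python
import statistics


def merge_burst(frames):
    """Merge equally sized grayscale frames by taking the median of each pixel.

    frames: list of frames, each a list of rows of brightness values.
    Assumption: the frames are already aligned with one another.
    """
    height = len(frames[0])
    width = len(frames[0][0])
    return [
        [statistics.median(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


# Three 2x2 frames of the same scene; the second has one blown-out pixel (255).
burst = [
    [[10, 12], [14, 16]],
    [[10, 255], [14, 16]],
    [[11, 12], [15, 16]],
]
merged = merge_burst(burst)
print(merged)
```

The median is used here only because it is a simple way to ignore a single bad reading; a real camera pipeline would also have to align the frames and cope with moving subjects.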

The Google Pixel does an even more impressive job with video, digitally compensating for shaky footage. It can also produce photos in poorly lit conditions using the automatic HDR+ (High Dynamic Range) mode, which combines multiple photos to create the best composite.

“Mathematically speaking, take a picture of a shadowed area: it’s got the right colour, it’s just very noisy because not many photons landed in those pixels,” says Marc Levoy, Google’s head of computational photography. “But the way the mathematics works, if I take nine shots, the noise will go down by a factor of three, by the square root of the number of shots that I take. And so just taking more shots will make that shot look fine. Maybe it’s still dark, maybe I want to boost it with tone mapping, but it won’t be noisy.”
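Levoy's square-root rule is easy to check numerically. The sketch below is a small simulation, not the HDR+ implementation: it assumes each pixel reading is the true brightness plus independent Gaussian noise, in which case averaging N shots should shrink the noise from sigma to sigma divided by the square root of N. The function name `noise_after_averaging` and all of its parameters are hypothetical.

```python
import random
import statistics


def noise_after_averaging(num_shots, true_value=20.0, sigma=5.0,
                          num_pixels=20000, seed=0):
    """Return the noise (standard deviation) left after averaging num_shots frames.

    Assumption: every reading is true_value plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    averaged = []
    for _ in range(num_pixels):
        # One noisy reading of the same pixel per shot.
        readings = [rng.gauss(true_value, sigma) for _ in range(num_shots)]
        averaged.append(sum(readings) / num_shots)
    return statistics.pstdev(averaged)


single = noise_after_averaging(1)
stacked = noise_after_averaging(9)
ratio = single / stacked
print(f"noise with 1 shot:  {single:.2f}")
print(f"noise with 9 shots: {stacked:.2f}")
print(f"reduction factor:   {ratio:.2f}")  # close to 3, the square root of 9
```

The average brightness stays the same in both cases, which matches Levoy's point: the stacked shot may still be dark, but it is far less noisy.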

We can thank smartphones, or maybe the fact that people choose to use these devices as their primary cameras, for the rise of computational photography. Limited by thin enclosures, smartphones can’t really add larger camera sensors or lenses to get truly great photographs and videos in all types of conditions.

Smartphones have pushed photography towards innovation because taking pictures (and sharing them) is one of the key features of today’s smartphones. The proof of this is the popularity of apps like Facebook, Instagram, Snap and many others for which photos are the key feature.

It’s no surprise that computational photography solutions are making their way back to standalone cameras and lenses. They may not replace innovations in sensors, lenses and camera bodies, but they are certainly useful in supplementing hardware to help produce consistently good photos.