The tech industry is looking beyond general-purpose machine learning and artificial intelligence for new capabilities, turning to what's known as computer vision.

Some say it will turn today's already powerful computers into tomorrow's "machine superpowers."

From the driver's seat of an autonomous vehicle to the treatment of congenital human diseases and much more, new machines with human-like perception, and the smarts to make sense of what they see, will have an enormous impact on our lives.

But giving a computational device the cognitive ability to identify and interact with objects (and with characteristics such as shape, weight, texture, form, and density) is a big step. Getting that device to understand the context, not just the content, of what it sees or detects in the physical environment is another step altogether, and one with enormous potential.

That potential stretches from spotting poorly made products on an assembly line to mapping pedestrian travel patterns and the routes people take to and from work or school. It can include better recognition of products at the grocery store checkout counter, or faster detection of malignant tumors inside the human body.

“Computer vision is one of the most remarkable things to come out of the deep learning and artificial intelligence world,” says SAS data scientist Wayne Thompson. “The advancements that deep learning has contributed to the computer vision field have really set this field apart.”

SAS’s software tools are used in data management, advanced analytics, business intelligence, criminal investigations, and predictive analytics.

Intel is another major IT company involved with computer vision, exploring the value in applications such as object and facial recognition, motion tracking, quality control and inspection for manufacturing processes, and advanced audience/demographic analysis.

Intel's CPUs and VPUs (vision processing units), when combined with high-resolution video cameras, edge- or cloud-based computing networks, and artificial intelligence (AI) software, could enable computer vision systems to see and identify objects in a way that approximates human perception.

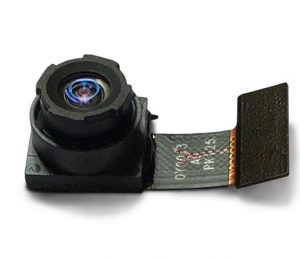

A Canadian tech firm has released computer vision tools, including a software development kit and a humanoid robot. Immervision image.

That's a big challenge because (no matter what you may think of our collective "vision" these days) our individual perception is driven by more than 120 million light-detecting receptors, the rods and cones, in the retinas of our eyes, along with a visual processing system made up of literally hundreds of millions of neurons.

Yes, our eyes are crucial. But it’s our brain that does most of our “seeing”, and that’s the challenge faced by computer vision system designers and engineers.

To help meet that challenge, and to build the next generation of intelligent vision systems for a wide range of industrial uses, Canadian tech firm Immervision has released computer vision tools, including a software development kit and a humanoid robot.

The robot, named JOYCE, is described by the company as "the first humanoid robot developed by the computer vision community to help machines gain human-like perception and beyond." Her purpose is to support the development of computer vision technology.

Immervision also released the JOYCE Development Kit, which lets engineers and AI developers upgrade her optics, sensors, and AI algorithms so that she can better understand her environment and build her capabilities.

“At Immervision, we strongly believe in the value of bringing together the computer vision community to break down the silos that are slowing the innovation cycle. Instead, let’s push forward the boundaries of machine perception through cross-pollination. We believe that JOYCE will help develop extremely innovative solutions to resolve complex industry challenges,” said Pascale Nini, President and CEO of Immervision.

Immervision develops and manufactures a wide range of wide-angle, 360-degree, and panomorph lenses. Immervision image.

The development kit comes with three ultra-wide-angle panomorph cameras calibrated to provide 2D, 3D stereoscopic, or full 360 x 360 spherical capture and viewing of the environment. It uses Data-In-Picture technology, so each video frame JOYCE sees can be enriched with data from a wide array of sensors, providing contextual information that improves her visual perception.

Once such capabilities mature, potential uses for a computer vision-enabled robot include enhancing the performance of smart home devices such as vacuum cleaners, lighting systems, and home appliances; improving the ability of first responders and firefighters to detect people and objects in an emergency, such as through smoke; and enhancing medical diagnostics to better identify cancerous growths or other conditions on a CT scan.

Immervision says we can all get a look at this humanoid robot, and see through her eyes, once live streaming is enabled and available.

Until then, here's the preview clip.

A Canadian tech company has unveiled JOYCE, "the first humanoid robot developed by the computer vision community."
