Although Facebook has come to dominate much of our socially mediated world, although much of the news has been filled with stories (lately, mostly negative) about this massively successful global enterprise, although Facebook still counts some three billion active users and 86 billion dollars in revenue (last year’s figures, USD), you may not be hearing that name much longer!

Facebook is planning to change its name.

According to sources, CEO Mark Zuckerberg will reveal the new company moniker at the upcoming Facebook Connect virtual conference on October 28, if not before. It may be that the company’s stated intention to build the ‘metaverse’ is triggering a rebranding. It may be that all those stories about the negative impacts of social media in general − and Facebook in particular − are reason enough for the change.

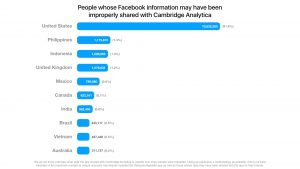

The Cambridge Analytica scandal seems like old news: that data was “harvested” back in 2015.

In many ways, those stories and that change have been building for years. The Cambridge Analytica scandal, in which the private information of millions of Facebook users was breached and the company was fined for failing to observe data protection laws as a result, already seems like old news: that data was “harvested” back in 2015.

But the precedent for that egregious kind of privacy violation had long been established! And so the question ‘Would you continue to call someone your BFF if they acted the way social networks do?’ came up years ago!

In a complaint filed with the Privacy Commissioner of Canada back in 2008, a key privacy concern was “the way Facebook shares the personal information of its users with third-party software developers who create games and quizzes and other apps that run on its network.”

Today, the big news comes from an in-depth investigative series recently published in The Wall Street Journal.

There have been five articles and four podcasts in the series, called The Facebook Files; they are direct, detailed and damning, but the core finding is short and straightforward: “Facebook knows, in acute detail, that its platforms are riddled with flaws that cause harm, often in ways only the company fully understands.”

Among the harms described: cyber-bullying, body shaming, self-doubt and suicidal thoughts affecting teen users, particularly girls; inequitable if not non-existent content moderation of false or misleading posted material, possibly leading to civil unrest and violence; undue influence on political activity through an algorithmic reward system that seeks greater engagement and advertising eyeballs but ends up boosting negativity and anger.

The accusations are underpinned by internal company documentation, including research reports, internal e-mails, and online discussions, even materials from staff presentations to senior company management.

Much of that revealed material came to light as Frances Haugen, a data science specialist in algorithmic product management who worked at Google, Pinterest and Yelp before joining Facebook a couple of years ago, identified herself as the previously anonymous complainant who reported concerns about Facebook to U.S. federal agencies.

She gave a much-publicized interview to 60 Minutes, underscoring her long-standing concerns that Facebook is misleading the public on its progress to fight and reduce hate speech, violence, and online misinformation.

But Mark Zuckerberg, Facebook founder and chief executive, disputed her position in a blog post: “At the heart of these accusations is this idea that we prioritise profit over safety and well-being.” He wrote that her analysis was “illogical,” and that it painted a “false picture of the company”.

However, the documentation Haugen provided is telling and unique, and she is not the only company insider to criticize the company. Another data scientist who raised alarm bells is Sophie Zhang, who said she found evidence of online political manipulation in countries such as Honduras and Azerbaijan. She appeared in front of a UK investigating committee earlier this week.

Will a similar situation play out here in Canada?

NDP MP Charlie Angus is among those calling on Ottawa to establish an independent digital watchdog to take on disinformation, hateful posts and a lack of algorithmic transparency. The watchdog could possibly call for and lead investigative committees. Angus also called for taxes on social media companies operating in Canada, among other Internet and information technology issues he commented on.

Before the election, the Liberal government had called for the overhaul of some Internet rules, but legislation aimed at regulating social media platforms and tackling online hate will need to be reintroduced in an upcoming session.

The government has said it wants to create a digital safety commissioner to enforce a new regime that targets child pornography, terrorist content, hate speech and other harmful posts on social media platforms. A proposed regulator could order social media companies to take down posts within 24 hours.

But certain provisions in the Criminal Code already make such activity illegal, so there is an opportunity to make use of what is already on the table, through stricter enforcement and equitable application. Likewise, existing legislation in the Competition Act presents the opportunity to use anti-trust proceedings to encourage competition where it is lacking (Facebook owns other popular social media platforms, such as WhatsApp and Instagram, as well as many other online and high-tech firms).

A more focused approach on the technical side of the issue, including greater transparency of algorithms and their function, is being proposed as a part of a campaign to make Facebook (or whatever it might be called) safer.

One Click Safer is one of several interventions being proposed at the Center for Humane Technology, an independent group seeking to “re-imagine our digital infrastructure”.

Simply by changing the function of the reshare button, CHT maintains, the negative implications of engagement-based content ranking can start to be reined in. The arbitrary amplification of content through algorithmic manipulation is one of the most worrisome ways the platform can cause harm.

CHT, by the way, has its own interview with Facebook whistleblower Haugen; it is conducted in part by CHT founder Tristan Harris, himself a former high-tech executive who has also shared insights gathered while working in the high-tech world.

As Harris noted, the One Click Safer campaign is inspired by the work of systems analyst Donella Meadows, and her 12 Leverage Points to Intervene in a System.

CHT adapted her framework to show different intervention points and describe specific ways to make Facebook more humane — from technical platform changes and operational transparency improvements to external government regulation and development of new business models.

Of course, a few more blown whistles from the inside and revelatory news stories on the outside can keep pushing the company, if not the entire industry, in the right direction.

So, too, can a large-scale migration of people away from certain platforms. While a three-billion-person boycott is almost inconceivable, a New York Times report, again referencing internal Facebook marketing research and related documents, indicates that social media usage is slipping among younger generations. And a new U.S. poll found as many as seven out of 10 Americans say social media platforms like Facebook and Twitter do more harm than good.

# # #

Facebook filter bubble. Original bubble © Trish. Used under Creative Commons license. CC-BY-NC-SA-2.0.

-30-