The Office of the Privacy Commissioner of Canada has launched an investigation into the company behind the artificial intelligence-powered chatbot ChatGPT.

The Canadian investigation into OpenAI, the operator of ChatGPT, was launched in response to a complaint alleging the collection, use and disclosure of personal information without consent.

The Commissioner’s action follows similar moves elsewhere. A complaint to the U.S. Federal Trade Commission describes ChatGPT as a risk to privacy and public safety. Germany and Italy appear headed toward official investigations (Italy has banned the app while a possible privacy breach is examined), and the 25 other European Union member states are hammering out new laws to address artificial intelligence programs.

As the use of artificial intelligence-equipped tools proliferates worldwide, and as generative AI programs, machine-trained on giant data pools with enormous supercomputing power, “create” more and more seemingly credible text, award-winning images, popular music and other digital content, the onus is on legislators not merely to keep up but to get ahead.

As Canadian Privacy Commissioner Philippe Dufresne said when announcing his investigation, “We need to keep up with – and stay ahead of – fast-moving technological advances, and that is one of my key focus areas as Commissioner. AI technology and its effects on privacy is a priority.”

ChatGPT and other generative AI programs are trained on huge amounts of data: ChatGPT’s capabilities were reportedly developed from a database of some 300 billion words. Pictures, voices, and other types of digitized data can also be used.

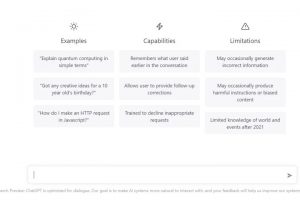

Potential questions, queries and prompts for OpenAI’s text-generating chatbot.

The chatty bot itself has referred to the “vast amount of text data that I have been trained on,” but just where all that data comes from, and how it is obtained, is a concern.

The exact sources aren’t known, but some suspect the data is “scraped” off the Internet: collected from websites, chatboards, social media platforms and other online content sources. Such material can contain personal information, gathered without informed consent to its use. Generative AI systems have moved well beyond words, of course, so the issues being raised involve not only the protection of personal information and informed consent but also the possibly illegal use of copyrighted material.

All of which is to say we have seen this movie before. Privacy regulators in this country have investigated data scraping and have taken enforcement action over it.

Recall Clearview AI, the privately owned American technology company that built a facial recognition service around a collection of billions of images. That collection was filled in part with facial images scraped from popular online services such as Twitter, Google, Facebook and LinkedIn.

Each of those services asked the company to stop harvesting photos. Following a joint investigation by the federal Privacy Commissioner and privacy regulators in Alberta, B.C. and Québec, Clearview was found to have violated Canadian privacy laws and was ordered to stop collecting and sharing images.

Another factor the Privacy Commissioner may consider is the right of Canadians under PIPEDA to access the personal information an organization holds about them and, if necessary, to have it corrected. Once such data has been absorbed into a large language model (LLM), such as OpenAI’s GPT-3.5 and GPT-4, through training and reinforcement learning, it could be difficult to access directly, let alone update or remove.

Currently, none of Canada’s privacy laws specifically addresses artificial intelligence, but the Privacy Commissioner is pushing to apply PIPEDA in full, since it already requires organizations to follow rules on the collection, use and disclosure of personal information. Amendments to Québec’s privacy laws, reportedly taking effect in September, will regulate the use of AI there to some extent.

Another tool for keeping up with, and staying ahead of, AI may be Canada’s Bill C-27, the Digital Charter Implementation Act. If passed, it will replace PIPEDA with the Consumer Privacy Protection Act (CPPA) and enact the new Artificial Intelligence and Data Act (AIDA).

(Ed’s note: a letter of support for AIDA legislation has been signed by dozens of Canadian tech analysts, researchers and participants in the field. It calls for greater protection for Canadians by ensuring AI systems are developed and deployed in a way that identifies, assesses, and mitigates the risks of harm and bias. It echoes similar sentiments in another open letter about AI and ChatGPT. Read on.)

AIDA could apply not just to AI developers but to any participant in the AI food chain, including companies that use platforms like OpenAI’s GPT-4 to drive their services or applications. Proposals are also being considered that would require AI vendors to declare any use of material protected by copyright or privacy law, and make them liable for possible misuse.

Adding to these legislative initiatives to rein in AI development, or at least put foul lines on it, are the calls from more than 30,000 tech industry notables, social and political commentators, lawyers, academics, legal researchers and concerned members of the general public, who said in an open letter that all AI labs should immediately pause, for at least six months, the training of AI systems more powerful than GPT-4.

The letter, organized by the non-profit Future of Life Institute and signed by well-known names such as Steve Wozniak, Yuval Noah Harari, Tristan Harris and Elon Musk, warns of an “out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

Should be easy to stay ahead of that, right?

-30-