By Gadjo Cardenas Sevilla

Smarter smartphones mean more intuitive apps that can access each other’s features and data safely. Next-generation applications will need to play nice with each other.

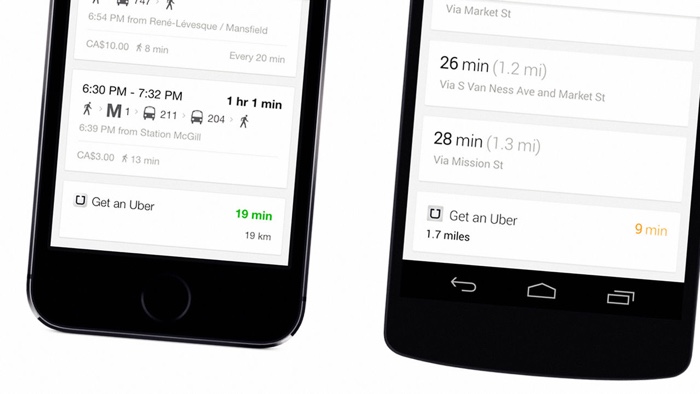

During a recent trip to London, England, I relied on Google Maps to help me navigate much of the city on foot as well as by public transport. I was surprised and delighted to notice that, alongside the walking, driving and train options, there was also an Uber option, complete with the time it would take to get me where I needed to go.

Not only was this information available in real time, I could also see how much the Uber ride would cost. Seeing that Uber would save me valuable time, I invoked the service directly from Google Maps, and my car arrived within five minutes as promised.

This type of mobile app interoperability is quite amazing because the OS now lets one app reach another app's features without the user leaving the first. If it is smart enough to sense an option that makes sense to the user, it can quickly make that option available.

Of course, app interoperability is something new. We don’t even see it that much on PCs, where you need to open several applications at the same time and usually cut and paste information between them yourself.

Google has been at the forefront of app interoperability for some time now. One of the best examples on Android is what happens when you open a photo or video you have taken and decide to share it: you see dozens of apps for sharing, editing, social media, mail, blogging, pinning, posting and more.

This is great for users, since they are one step away from another app while staying within the context of what they are currently doing.

Apple’s iOS is a bit more limited because Apple likes to silo individual apps and their functionality for security purposes. Extensions are available, but they aren’t as open as Android's approach.

HealthKit, Apple’s health-tracking framework for iOS, can access data from various health apps and devices. It securely files this data to give users an overview of their health picture and can send alerts if needed.

Is it possible that the future will no longer rest on individual apps running independently, but will instead be time- and location-based? The Google Maps example above works this way: it draws on location, time of day, and a list of options (walking, public transportation, driving or Uber).

Wouldn’t it be great if we didn’t need to hunt for apps to get at their functionality, and they were simply available at a glance when we need them? Smartwatch maker Pebble is already offering this on its time-based OS.

This means apps show up on screen as soon as they are needed. If you’ve subscribed to the Score sports app, once the Blue Jays have finished their game, the score and results will appear on screen. The same goes for notifications, calendar dates and other apps, which appear intuitively when the time is right.

A location- and time-based app system that focuses on functionality seems to flow more naturally than the purely app- and notification-based system we have today.