Launched as a prototype barely two months ago, ChatGPT has exploded in popularity. And potential market value.

ChatGPT is a conversant chatbot, powered by artificial intelligence (AI). Pose a question or type a statement into its dialog box, and it will reply in what seems a literate, well-constructed and grammatically correct manner.

ChatGPT is a generative AI-based technology that interacts with users in a seemingly human way. It generates “conversations”, among other things. It was built on very large language models trained on huge text data sets, and both human-moderated and machine-learning techniques were incorporated into its development.

Observers say its capabilities mean the end of the college essay. Or the demise of code-writers. Or the death of journalism. Or the dawn of a new communications era. Or the end of the world as we know it. More concretely, it may mean a valuation of roughly $30 billion for the research lab that developed it, a firm known as OpenAI.

A couple of weeks after OpenAI released ChatGPT last November, millions of users were trying out the app. The system hit user capacity rather quickly, and it can still suffer from overuse and blocked access.

Nevertheless, reports indicate OpenAI is in talks that would value the company at around $30 billion. Tech giant Microsoft seems ready to invest $10 billion itself (it is already an investor in OpenAI and has incorporated OpenAI’s image generator, DALL-E 2, into several of its applications). Either scenario would make OpenAI one of the most valuable start-ups on record, despite generating little revenue.

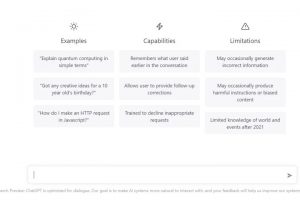

And despite limitations: ChatGPT can occasionally generate incorrect information (but it sounds authoritative when it does) and it admittedly has limited knowledge about world events since 2021.

Potential questions, queries and prompts for text generating chatbot from OpenAI. (Image: Screenshot of OpenAI ChatGPT)

OpenAI co-founder and CEO Sam Altman has acknowledged the shortcomings, noting on Twitter that ChatGPT is “incredibly limited, but good enough at some things to create a misleading impression of greatness.”

He’s described some of the technology underlying that impression, and he’s stated that each conversation on ChatGPT costs OpenAI several U.S. cents.

That figure has allowed other observers, such as Tom Goldstein of the University of Maryland’s Department of Computer Science, to estimate that OpenAI is spending about $3 million a month to keep its bot chatting.

Misleading impressions have big costs, and not only financial.

To access ChatGPT as a fully featured tool, you must create an account. Unfortunately, many will encounter a busy signal; when we tried to sign up, we were told “ChatGPT is currently overloaded. Please check back later.”

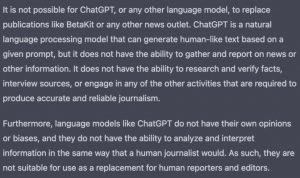

Text on screen generated by ChatGPT. (Image: Screenshot of OpenAI ChatGPT)

If you do get in, you may notice a lack of consent forms and privacy policies. That may be a concern to some people; it may be illegal in some jurisdictions.

But once you are signed up and allowed in, you can ask ChatGPT itself what it is and how it works. It will tell you: “As a large language model trained by OpenAI, I generate responses to text-based queries based on the vast amount of text data that I have been trained on. I do not have the ability to access external sources of information or interact with the [I]nternet, so all of the information that I provide is derived from the text data that I have been trained on.”

As such, the system does not “think” up original thoughts; it draws on its training data to mimic human language and existing content of many types. Its “greatness” stands on the shoulders of…well, all of us!

And while many say it is virtually impossible for people to detect AI-generated content, that’s exactly the challenge other developers have taken up. AI tools for detecting AI-generated text seem to be a growing industry: at least one young entrepreneur, a college student, has written an app to detect whether text was written with AI. Edward Tian, a 22-year-old computer science student at Princeton, says it even detects essays written by ChatGPT.

If so, he’s helping raise the bar: a study conducted at Northwestern University found that fake scientific abstracts generated by ChatGPT did not set off alarms in traditional plagiarism-detection tools. However, Catherine A. Gao and her team did report that more sophisticated AI output detectors could discriminate between real and fake abstracts.

Investors may need such a tool, whether for ChatGPT or other emerging technologies: which is a real investment opportunity, and which is a fake?

For the rest of us, it seems telling the difference between fake and real greatness, between fake and real value, will require some real intelligence.

# # #

Text generated by ChatGPT partially fills a wait screen. (Image: Screenshot of OpenAI ChatGPT)

-30-