Think your smartphone screen is too small? Well, raise your arm – or paint a wall!

Those are a couple of the new ways we can interact with our computers.

Newly developed paint that turns any wall into a giant interactive touchscreen has rolled out at a Canadian technology showcase, for example, triggering not only awesome gaming opportunities but a whole new way to look at smart homes and offices.

Thousands of the world’s top technologists, product designers and research scientists gathered in Montréal recently to show – often for the first time in public – new tech gadgets and potential consumer products coming out of the expanding human-computer interaction space.

How we interact with computers has long been an important area of study, but the field has grown to include any point of contact between people and technology, from the keyboard to the touchscreen to biometric interfaces and more. Wherever human-computer interaction takes place, researchers and product specialists want to determine just how efficient or economical that contact is. They want to see how well the many physical, virtual, multi-touch or 3-D interfaces we use to control a product actually work.

So presenters from companies like Google, Facebook and Spotify joined scholars from universities including Stanford, MIT, Harvard, Carnegie Mellon and the University of Waterloo at this year’s ACM CHI Conference on Human Factors in Computing Systems, or CHI 2018.

The event is known for the unveiling of many different technologies prior to market deployment: eventually, such ideas are incorporated into more familiar products or services like social networks, instant text messaging, personal health and elder care, fitness tracking, smart homes, the Internet of Things and wearable devices.

And interactive paint.

Often the largest available surface in any room in the house, walls are not used for much beyond holding up pictures, mounted TVs or the ceiling above your head.

But with a bit of high-tech surface coating and embedded electronics, the blank wall can be a smart surface, connecting people and products through gesture and touch.

Yang Zhang, a PhD student in human-computer interaction, presented a research paper at CHI 2018 outlining the concepts behind Wall++, a surfacing agent with sensing capabilities.

Researchers at CMU and Disney Research used simple tools and techniques to transform dumb walls into smart ones. (Credit: Carnegie Mellon/Disney)

Zhang and a research team from the Human-Computer Interaction Institute at Carnegie Mellon University and Disney Research showed how they can turn dumb walls into smart ones with not much more than a paint roller. Their product is estimated to cost about $20 per square metre of coverage.

The conductive Wall++ paint creates small electrodes on the wall, letting the surface sense or track a user’s direct touch and even a nearby body gesture. Treated walls also act as electromagnetic sensors that can monitor electrical devices and smart appliances, respond to them and send them signals. The wall could sense, for example, that the room is too bright and automatically dim the lights in response. It could tell that the water has boiled, and so turn off the stove-top kettle.

Of course, walls surround us.

Not just at home, but in the office, at school or university, in a hospital, museum or shopping mall. That seems to open up as many possible uses for room-size interfaces and context-aware applications as it does colour swatches and interior design options.

Sometimes, if we’re lucky, there are no walls between us. So if you’re looking for another kind of touch-sensitive surface, raise your arm!

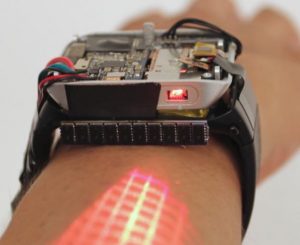

Another team of researchers (also from Carnegie Mellon) unveiled at CHI 2018 a display concept which uses the user’s skin to expand the display surface of a typical smartwatch.

Our own skin provides a natural, immediate and ‘always on’ surface for digital projection, say researchers from Carnegie Mellon University who presented at CHI 2018. © 2018 Association for Computing Machinery.

They call it LumiWatch. It uses a tiny embedded pico-projector to display a pattern or image on the skin, and a depth-sensing array to determine a user’s finger position when interacting with the display.

Imagine a highly calibrated little keyboard projected on your arm, and a super-accurate depth and position sensor watching any interaction with the projected interface; simple swipe commands could unlock the keyboard, and finger touches to the projected interface image can provide input commands to the smartwatch and beyond to other connected devices.

The LumiWatch incorporates Bluetooth 4 and Wi-Fi on chip for its communication needs; it has a 1.2 GHz quad-core CPU and 4 GB of flash memory, runs Android 5.1 and is powered by a 740 mAh, 3.8 V lithium-ion battery incorporated in the casing.

Somewhere in between the projected power of a loaded smartwatch and the visual scale of a wall-sized touchscreen is the cross-device opportunity envisioned by a tech team playing with a conceptual framework dubbed David and Goliath.

A new way to visualize data combines the power of smartwatches with large projection screens. Image: University of Maryland

They’ve developed a workflow and interplay between the two devices so that analysts or researchers could, for example, extract or download data from tables and charts shown on a large display to their smartwatches and then manipulate the data on other visualizations by physical movements, direct touch or remote interaction.

The development team sees these kinds of pull/push/preview interactions as valuable in many visualization tasks, bringing a look-see here metaphor to the reams of data gathered by social media networks, by vehicles in motion, by people at work or play.

Of course, if we humans are looking to take advantage of our own attributes in order to get the most from our digital devices, we might look to our fingers – even all ten of them!

Many gadgets and devices these days can be pretty much fully controlled with one or two fingers – swiping and tapping are mostly index-finger functions.

But what if, for example, smartphone manufacturers started using more of the device to add some new human-computer interface options – like a button or two on the back?

Research into BoD (back of device) interaction and one-handed controls beyond the touchscreen is underway at the University of Stuttgart in Germany, where researchers used a sophisticated motion capture camera system to analyze the ways people can, do or could control their devices comfortably, conveniently and effectively. They took into account both the increasing size of many devices and the range of children’s and adults’ hand sizes that need to hold and use them.

Participant exploring the comfortable area of the thumb on a Nexus 6 in front of an OptiTrack motion capture system at the University of Stuttgart.

The investigators proposed some options for device design and some additional places to put input controls that would not force the user to change his or her grip, lose grip stability and possibly drop the thing.

Key findings showed that BoD interaction held great promise for a more physical, if not more humane, way to interact with our computers.

CHI 2019, the next Conference on Human Factors in Computing Systems, will be held in Glasgow. The theme will be Weaving the Threads of CHI.

-30-