By Gadjo Cardenas Sevilla

The principles of photography have remained the same for over 100 years. A camera focuses on a subject through a lens; once the subject is sharp, we make sure the exposure is correct for the light falling on it, and an imprint of that moment in time is recorded. The result is a photo.

During the age of analog cameras and film, it often took dozens of tries to get the perfect photo, which meant a lot of wasted prints and discarded film. In the age of digital cameras and, more importantly, smartphones, artificial intelligence and machine learning have dramatically changed the quality of photos.

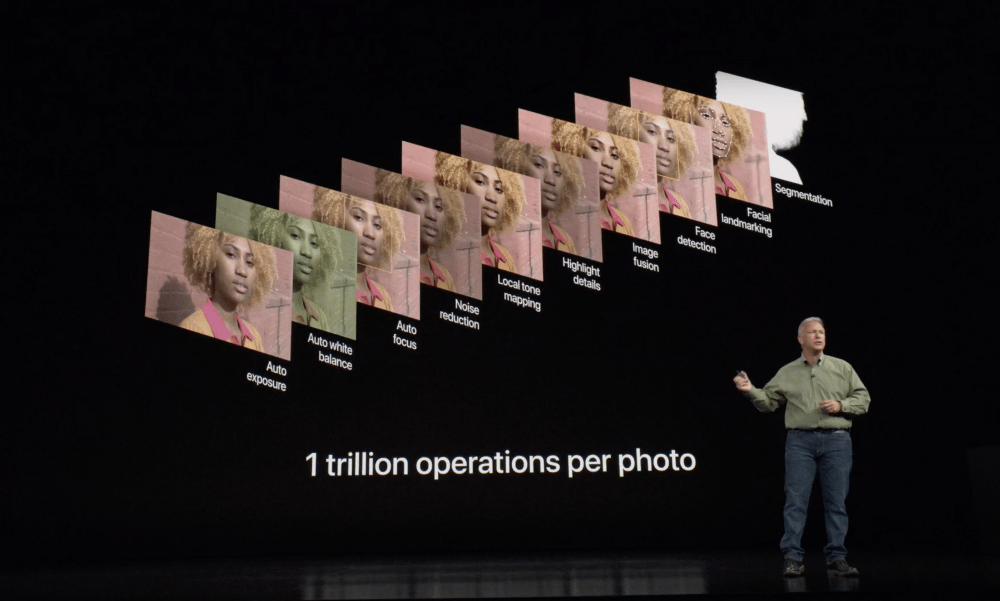

Smartphones are handheld computers: they have multi-core processors and are connected to the Internet and the cloud. Limited by their slim bodies and small sensors, smartphones rely on computational photography and artificial intelligence to help ensure the best photo is taken each time. Machine learning lets the camera remember settings, adjust lighting and exposure, and focus on human or animal faces, and it gets smarter the more it's used.

The speed and computational power of today's smartphones are staggering. These devices start capturing frames even before the shutter button is pressed, and while traditional cameras take one photo at a time, smartphones can capture many shots in rapid succession.

To ensure you get the best photo each time, an iPhone or Android device will shoot a series of photos and often merge them into one near-perfect picture. The Google Pixel goes a step further: it captures a rapid burst of frames, deliberately underexposed to protect the highlights, and merges them to bring out detail in the shadows as well (Google calls this HDR+, after high dynamic range).
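Merging frames shot at different exposures is the classic way to widen dynamic range. Here is a minimal, hypothetical sketch of that general idea. It is not Google's or Apple's actual pipeline. It assumes two already-aligned grayscale frames stored as flat lists of values between 0 and 1, and blends them pixel by pixel, giving more weight to whichever frame exposes a given pixel closest to mid-grey. The function names and the `mid` and `sigma` parameters are illustrative choices, not taken from any phone maker.

```python
import math


def well_exposedness(value, mid=0.5, sigma=0.2):
    """Weight a pixel value (0..1) by how close it is to mid-grey (hypothetical helper)."""
    return math.exp(-((value - mid) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames):
    """Blend aligned, same-sized frames pixel by pixel, favoring well-exposed values."""
    fused = []
    for pixels in zip(*frames):  # the same pixel position in every frame
        weights = [well_exposedness(p) for p in pixels]
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / sum(weights))
    return fused


# Usage: two hypothetical 3-pixel frames of the same scene.
under = [0.02, 0.20, 0.60]  # dark frame: shadows crushed, highlights intact
over = [0.25, 0.70, 1.00]   # bright frame: shadows visible, highlights clipped
fused = fuse_exposures([under, over])
print([round(v, 2) for v in fused])
```

In the fused result, the darkest pixel leans toward the bright frame's value and the brightest pixel leans toward the dark frame's value. Published exposure-fusion methods also weigh contrast and color saturation and blend at several scales to avoid halos. This per-pixel version only shows the core idea.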


You can think of your smartphone as a genius photographer that can assess lighting, focus on the subject, separate the foreground from the background and take the perfect photo or even a series of photos all in a fraction of a second.

Apple says the iPhone XS goes through one trillion operations per photo. Google’s Pixel 3 pushes all this further. It uses HDR+ burst photography to buffer up to 15 images and then employs super-resolution techniques to increase the resolution.

These smartphones also compensate for hand movement and shake: motors in their lenses counteract vibrations, so they can produce tack-sharp images even without a tripod. Computational photography largely eliminates the hindrances to getting a good picture that traditional photography has long struggled with.
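Lens motors handle part of the shake in hardware, but merging a burst also means lining the frames up in software. Here is a minimal sketch of that alignment step. It assumes small grayscale frames of the same size stored as nested lists, and it treats the shake as a pure whole-pixel shift. The code tries every shift within a small window and keeps the one with the lowest mean squared difference. The names and the `max_shift` window are hypothetical. Real pipelines typically align small tiles separately and to sub-pixel precision.

```python
import random


def mean_sq_diff(ref, frame, dx, dy):
    """Mean squared difference between ref[y][x] and frame[y + dy][x + dx] where both exist."""
    height, width = len(ref), len(ref[0])
    total, count = 0.0, 0
    for y in range(height):
        for x in range(width):
            sx, sy = x + dx, y + dy
            if 0 <= sx < width and 0 <= sy < height:
                total += (ref[y][x] - frame[sy][sx]) ** 2
                count += 1
    return total / count


def estimate_shift(ref, frame, max_shift=2):
    """Brute-force search for the whole-pixel (dx, dy) that best lines frame up with ref."""
    candidates = [
        (dx, dy)
        for dy in range(-max_shift, max_shift + 1)
        for dx in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda shift: mean_sq_diff(ref, frame, *shift))


# Usage: a random 8x8 "scene" and a second frame whose content has moved
# 2 px right and 1 px down, as if the phone shook between shots.
rng = random.Random(0)
scene = {(x, y): rng.random() for x in range(-4, 12) for y in range(-4, 12)}
reference = [[scene[(x, y)] for x in range(8)] for y in range(8)]
shaken = [[scene[(x - 2, y - 1)] for x in range(8)] for y in range(8)]
print(estimate_shift(reference, shaken))  # (2, 1)
```

Once the shift is known, each frame can be moved back into place before merging, so the shake does not turn into blur.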

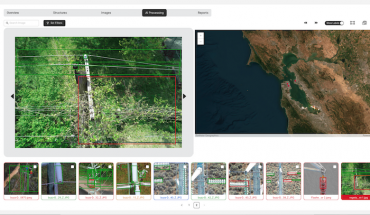

Google's Night Sight feature is a groundbreaking low-light shooting mode, and it is a product of pure computational photography. It takes multiple shots and merges them, and it also uses AI to discern objects while reducing graininess and blur. It is a feature best experienced first-hand, and it completely changes the game for low-light shots.
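Simple statistics can illustrate the noise-reduction half of that description. Random sensor noise differs from frame to frame, so averaging N aligned frames of a still scene shrinks the noise by roughly the square root of N. Here is a minimal sketch under two assumptions: the frames are already aligned, and the noise is Gaussian. It does not model the alignment, motion handling or machine learning that Night Sight itself uses, and all names and numbers are hypothetical.

```python
import math
import random


def capture_noisy_frame(scene, noise_sigma, rng):
    """Simulate one short exposure: the true scene plus random sensor noise."""
    return [value + rng.gauss(0.0, noise_sigma) for value in scene]


def merge_by_averaging(frames):
    """Average aligned frames pixel by pixel; the random noise partly cancels out."""
    return [sum(pixels) / len(pixels) for pixels in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference from the noise-free scene."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(image, scene)) / len(scene))


rng = random.Random(42)
scene = [0.05 + 0.10 * (i % 10) / 9 for i in range(10_000)]  # a dim synthetic scene
one_shot = capture_noisy_frame(scene, 0.05, rng)
burst = [capture_noisy_frame(scene, 0.05, rng) for _ in range(16)]
merged = merge_by_averaging(burst)
print(f"single frame noise: {rms_error(one_shot, scene):.4f}")
print(f"16-frame merge:     {rms_error(merged, scene):.4f}")  # roughly a quarter of the above
```

Sixteen frames cut the noise to about a quarter. That is why a phone can take a burst of short, individually grainy exposures in the dark and still produce a clean picture.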

Computational photography is a great solution. Using AI and machine learning to handle the minutiae and nuance of taking great photos allows users to simply focus on enjoying the moment without fussing over the controls.

Computational photography also raises some questions. Are you taking a photo, or creating a composite from a range of photos? Some smartphones famously apply their own processing to smooth out pixels and make images more presentable. The result can be synthetic-looking skin tones and an almost plastic quality to portraits.

Photo shot in a dark scene without Google’s Night Sight mode

Photo of the same scene with Night Sight mode

This is vastly different from film and analog photography, which give off a warm and realistic look (when properly exposed and focused). Even film grain lends a lifelike quality that no digital or computational camera can quite replicate.

This becomes a bigger issue when we look at authenticity. Some iconic photos lose their magic once you find out they were posed. Robert Doisneau's Le Baiser de l'Hôtel de Ville looks like one of the most spontaneously romantic photos ever taken, a kiss caught amid a busy Parisian street, but it wasn't a candid snapshot: the photographer commissioned friends to flirt and frolic before snapping the shot.

Computational photographs are made up of more than one photo. They are altered at a microscopic level and edited inside the smartphone before you ever see them on the screen. Some might say all smartphone photos are doctored to a certain extent and none of them are completely honest representations of reality, but they sure look amazing.