Much of today’s technology is designed to deceive and manipulate users, not to protect their privacy.

On our smartphones, social media sharing sites and online business networks, product designers use certain settings, colours, patterns, techniques and methods to get us to use their valuable products more, while protecting our valuable assets less.

Several books and reports look at how and why technology is designed the way it is, and the picture they paint is not pretty: rather than showing a consistent, overarching concern for user privacy and the protection of personal information, many companies treat it as a corporate imperative to get users to engage more, disclose more and click more.

The tools they have to influence our use of their technology include buttons and links, timing of alerts and warnings, the shape of screens, the colour of dashboards and more. Their careful and strategic use of language leads us to follow links, fill out surveys, respond to suggestions and otherwise share information in ways that are good for the company, but not necessarily good for us.

Deceived By Design

In a report called Deceived By Design, the Norwegian Consumer Council (NCC) says that tech giants (like Facebook, Google and Microsoft) use design to “nudge users away from privacy-friendly choices.”

That word, “nudge”, sounds gentle, almost benevolent.

But nudging is a powerful behaviour-modification concept, and in the 44-page NCC report the language grows stronger and darker. The authors identify several “dark patterns” in these designs, including privacy-intrusive default settings, misleading wording, and interfaces that give users “an illusion of control” while hiding privacy-friendly choices and making them take more effort to select.

The report also says that tech design often moves from a gentle nudge to a more direct threat: if users decline to accept certain privacy policies, they are warned of less relevant content, a loss of functionality or even the deletion of their account.

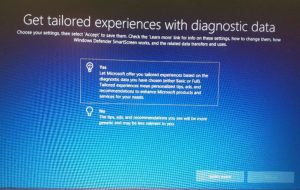

According to the NCC report, “When asking users to choose whether Microsoft can allow apps to use the users’ Advertising ID to personalize ads, users were only told that denying this permission would result in less relevant ads. Additionally, every setting in the process was framed as a statement, such as ‘Improve inking and typing recognition’ and ‘Get tailored experiences with diagnostic data’. Allowing data sharing was always framed as a positive ‘Yes’, while restricting sharing and collection was a negative ‘No’.”

But does this deception or manipulation constitute a blatant effort to trick people? Are these examples of evil intent or malicious desire? Or of carelessness and an overall lack of concern?

In most cases, no. These companies’ business models are built on user activity, engagement, sharing and disclosure. They operate under a corporate mandate to generate value, which today often means collecting data. Even when they appear to be protecting data, the mandate to collect it remains in place.

Social media companies, for example, which profit from user data through advertising, “have every incentive to use the power they have with designers to engineer your almost near-constant disclosure of information. We will be worn down by design; our consent is preordained,” says Woodrow Hartzog.

In his new book, Privacy’s Blueprint: The Battle to Control the Design of New Technologies, Hartzog argues that technologies are engineered, at a deep level, to undermine user privacy, and that design should therefore be subject to better standards, and even to regulation and law.

“Design is everywhere; design is power and design is political,” he writes.

Author Woodrow Hartzog discusses privacy implications caused by the design of technology in an information session hosted by Terrell McSweeny, former Commissioner of the Federal Trade Commission (FTC). Twitter image.

Hartzog, who is on the faculty of Northeastern University School of Law, often speaks and writes about how the ability to design a product that people use amounts to power over them. “Design channels behaviour,” he stresses, and a given design makes certain outcomes more or less likely.

Addicted By Design

He describes an approach among tech makers that is very similar to the one used by Las Vegas slot machine manufacturers, as documented by New York University professor Natasha Dow Schüll in her book, Addiction by Design.

The bridge between technology design and addiction, she says, is something called a variable reward. Pull the arm on a slot machine, and you may be an instant winner. Or you may not. That uncertainty is the enticement of a variable reward: it seems worth trying again and again, particularly when the risk seems minor. Machine algorithms and interface designs reinforce that sense of potential payoff and low risk with every try. The reward may be a cash payout, or it may be a thumbs-up “like” on your most recent post or food photo.

From the slot machine to the smartphone, the idea remains the same.

“The design of the phones is where the dark side starts, but not where it ends,” says tech advisor and commentator Roger McNamee. “The design of platforms and the way they exploit the addictive properties of smartphones creates another set of issues.”

McNamee and mobile UI designer Tristan Harris are among the founders of the Center for Humane Technology, where calls for more ethical design are often made and uses of deceptive or addictive technology are often cited. Harris, who launched the Time Well Spent movement, is an advocate for ethics in technology design.

LinkedIn, they say, is an obvious offender when it comes to deceptive or manipulative design. The business-oriented network wants as many people using it as possible, of course, so it prompts users to accept connections recommended by the system. It encourages them to respond to messages that the system can generate and to share endorsements that the system then promotes.

LinkedIn, like Facebook, can create the illusion that other users are making inquiries, initiating outreach, responding to obligations, but in many cases, it is the system itself that generates the triggers that users respond to. Of course, Harris and McNamee point out, the system profits from the time users spend responding.

It’s the “architecture of social media” that encourages users to constantly connect and always share.

And it’s their business.

But what is their politics?

That’s the disconcerting question posed by Langdon Winner in his essay, “Do Artifacts Have Politics?”

Winner asked the question long before the arrival of smartphones and social media networks, but its relevance may never have been greater.

“[T]he invention, design, or arrangement of a specific technical device or system becomes a way of settling an issue in the affairs of a particular community,” he wrote decades ago! The ability of technology to influence or settle the affairs of a community makes it inherently political, he asserted, long before Facebook, Gab or Ontario Proud became examples.

Designed By Choice

Consumers and technology users often take comfort in the belief that, with so many options on the market, people’s judgement and market demand will win out over privacy threats: “I can go to another product or platform if need be.” “There are plenty of settings I can use to protect myself.” “They always ask if I want to proceed, so I am protected.”

But is user choice the same as user privacy?

No, says Barry Schwartz. Too much choice, in fact, exploits our weaknesses by wearing us down with endless options and an unlimited number of consent or permission requests. In his book, The Paradox of Choice, Schwartz says the sheer number of choices available to a technology user can contribute to anxiety, stress and even bad decisions.

He recognizes how hard it is for users “to grasp the meaning of thousands of possible data disclosures” contained in even basic user agreements or manuals, let alone how those disclosures might be used against them.

Too many choices wear users down until they simply click “Accept” or “I agree” to get on with it! But if consent is preordained (through intentional design, created desire or threatened loss of service), then privacy is violated just the same.

So that big, eye-catching red button, that oh-so-cute chat bot, that complicated consent form, that option for a free personality profile, that user agreement with the triple-negative sentence: they are not there by accident.

They are there by design.

And the design is there for a purpose.

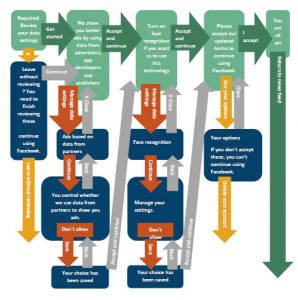

By giving users an overwhelming number of granular choices to micromanage, Facebook has designed a privacy dashboard that, according to NCC analysis, actually discourages users from changing or taking control of the settings.

-30-

Clay Chandler of the Fortune Data Sheet e-newsletter has an interesting takeaway from a day spent at an off-the-record design retreat in California, hosted by IBM Design team leaders.

He offers no specifics, but his 30,000-foot view is both revealing and alarming in light of what has already happened, and what may come next:

“There’s an increasingly urgent debate among designers about whether they’re aiding and abetting business models that manipulate users into surrendering personal data, buying stuff they don’t need, and engaging in socially destructive behaviors. Though the ‘Target effect’ (which nudges shoppers to over-consume), the addictiveness of video games like Fortnite, the hijacking of social media platforms like Facebook, and other ills are often described as ‘tech problems,’ they can also be construed as epic design fails. Designers fear advances in AI, Big Data and 5G may only make things worse.”