A rapidly growing collection of literature is shining a light on the use of dark patterns, the tricks and techniques that hardware and software designers use to get us to do things, use things and click on things we ordinarily might not.

In our smartphones, on our social media sites and in our online business networks, product designers are using certain words, settings, colours, patterns, techniques and methods to get us to stay on their sites longer and use their products more. One of the problems is that this kind of design does less to protect our valuable assets.

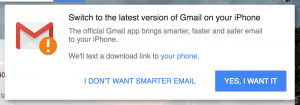

Confirmshaming tries to make the user opt in to something using a guilt trip. The option to decline is worded in such a way as to shame the user into compliance.

Several books and reports look at how and why technology is designed the way it is, and the picture they paint is not pretty: rather than having a consistent and overarching concern for user privacy and the protection of personal information, many companies have as a corporate imperative the need to get us to engage more, to disclose more, to buy more.

As just one example I encounter often: LinkedIn, like Facebook, creates the illusion that other users are making inquiries about me, initiating outreach, responding to previous posts. But in many cases, it is the system itself that generates these triggers, not another user. The system encourages users to respond to messages that are generated by the system and to share endorsements that are then promoted by the system. Of course, the system profits from the time users spend responding.

It’s the “architecture of social media” that encourages users to constantly connect and always share.

The tools companies use to influence how we use their technology include buttons and links, the timing of alerts and warnings, the shape of screens, the colour of dashboards and more. Their careful and strategic use of language leads us to follow links, fill out surveys, respond to suggestions and otherwise share information in ways that are good for the company, but not necessarily good for us.

Dark patterns can appear almost anywhere in user interface design, or, as it is sometimes called, “choice architecture”. A familiar example is the special sales message implying you’d better hurry, because there are only two of your favourite item left to buy.

Though the trend is a growing concern today, the term “dark patterns” was first used back in 2010 by London, UK-based interface designer and cognitive scientist Harry Brignull.

He defined the dark pattern as “a user interface that has been carefully crafted to trick users into doing things, such as buying insurance with their purchase or signing up for recurring bills.”

He launched a website at the time, darkpatterns.org (now called Deceptive.Design), where he gives examples and descriptions of the deceptive techniques and dark intentions embedded in some product designs.

“When you use the web, you don’t read every word on every page; you skim read and make assumptions,” Brignull explains. “If a company wants to trick you into doing something, they can take advantage of it by making a page look like it is saying one thing when in fact it is saying another.”

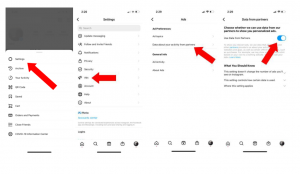

Consumers can get additional help defending themselves against dark patterns, and controlling access to their private information, from a new tips guide from the U.S. PIRG Education Fund. PIRG stands for Public Interest Research Group; such groups operate throughout the U.S. and Canada, working as direct advocates on issues such as consumer protection, public health and transportation.

The group’s tips guide also points out what companies can do with the data collected through their use of dark patterns.

“Beyond the information that we post online, apps left in their default settings collect data about our daily lives: where we are, who our friends are and what we do online,” said Isabel Brown, a Consumer Watchdog associate with the U.S. PIRG Education Fund. “Companies then gather and, yes, buy and sell our data. We become commodities.

“Because the data collection and transactions happen behind the scenes, many consumers don’t know that’s happening. And once someone has your information, they may try to manipulate you into buying something or doing something you don’t mean to.”

The guide provides step-by-step instructions for safely setting up online accounts.

“Companies use the information they collect to send us targeted ads. Sometimes this can be helpful, but other times it’s downright creepy,” said Brown. “But consumers have the power to decide how their data is used, if at all.”

While dark patterns are often used to boost sales and marketing efforts online, darker motivations are also at play.

Artificial intelligence expert Guillaume Chaslot, who once worked on developing YouTube’s recommendation engine, has spoken and written about how the same kind of recommendations and priorities can churn up not only similar videos, but similar outrage, conspiracy theories and extremism. Chaslot describes the development and use of those hidden patterns on his website, AlgoTransparency.org, which tracks and publicizes YouTube’s recommendations for channels with controversial content.

In Europe, the European Data Protection Board released guidance on dark patterns used on social media platforms and how they can infringe data protection regulation. The board offered examples and best practices for addressing dark patterns, but noted these are “not exhaustive.” The guidelines outline principles for transparency, accountability and data protection by design, as well as GDPR provisions that can help in assessing dark patterns. They also include a checklist for identifying particular dark patterns.

No matter the motivations, design tactics and dark patterns trick people into doing all kinds of things they don’t mean to, from signing up for a mailing list to submitting to recurring billing to joining a radical political group.

So many kinds of things, in fact, that the Electronic Frontier Foundation has put in place a project so members of the public can submit examples of deceptive design patterns they see in the technology products and services they use.

“[S]ubmissions to the Dark Patterns Tip Line will help provide a clearer picture of people’s struggles with deceptive interfaces. We hope to collect and document harms from dark patterns and demonstrate the ways companies are trying to manipulate all of us with their apps and websites,” said EFF designer Shirin Mori. “Then we can offer people tips to spot dark patterns and fight back.”


A decade ago, dark patterns were described as “a user interface that has been carefully crafted to trick users into doing things…”. Those patterns have evolved into today’s deceptive design of hardware products and software services.
