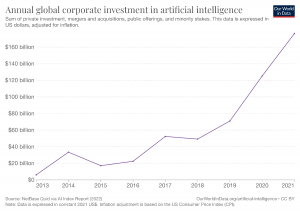

More than 1,300 companies in Canada are involved in developing and delivering artificial intelligence products and services. More than 2,000 investors have poured several billion dollars into the sector, according to the business intelligence firm Crunchbase, and much more investment is anticipated.

By harnessing the power of AI, large Canadian firms including, but not limited to, Kinaxis, VIQ Solutions and ODAIA, as well as small businesses and tech start-ups like CoverQuick and ParagraphAI, are making inroads for themselves in an already crowded and competitive field.

It is also a controversial field: key industry players, including some directly involved in developing AI tools, are calling for a reassessment, a development freeze or an outright abandonment of the technology.

In a letter of support for proposed new Canadian legislation covering AI, dozens of Canadian tech industry participants say AI systems should only be developed and deployed in a way that first identifies, assesses, and mitigates the risks of harm and bias. Canadian Geoffrey Hinton, the so-called godfather of AI and now a former Google employee, has also expressed his concerns and warnings.

More than 30,000 tech luminaries and others said in an open letter organized by the non-profit Future of Life Institute that all AI labs should immediately pause the training of AI systems more powerful than GPT-4 for at least six months. OpenAI CEO Sam Altman, whose company makes ChatGPT, recently appeared before a U.S. Senate subcommittee to share, and in some ways restate, his own concerns and warnings. (The Canadian Privacy Commissioner has started his own investigation into AI usage.)

Long before any of the dire predictions about AI ending the world come true, tools built with it are enabling people to communicate in ways they previously could not, for example by using ChatGPT-like capabilities to generate written text for job applications.

For anyone uncomfortable writing business or professional correspondence in English (recent immigrants to Canada, for example, or folks with dyslexia or other challenges), AI tools that can generate words, sentences, paragraphs and entire documents quickly and easily are far from life-threatening; sometimes they are life-saving.

The Canadian ChatGPT-based CoverQuick program helps generate professional job applications, resumes and text related to job search, and it’s a big help to many students and non-native English speakers seeking employment in Canada.

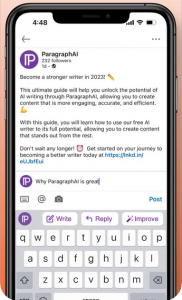

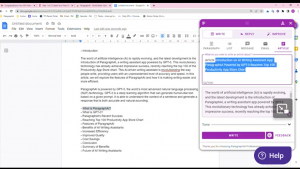

ParagraphAI is a Canadian multilingual AI writing assistant program, also powered by OpenAI’s ChatGPT. It can be used to instantly draft text, respond to messages and emails, and ensure correct grammar and tone while, the developers say, improving productivity and writing quality.

Once a user describes the topic or subject to be covered, ParagraphAI can write articles, essays, emails or other text messages, which can then be fine-tuned for vocabulary, tone and voice using virtual keyboard entry or a handy slider bar. Multilingual live chat and website translation features are also available. The basic service is free (with output limits), and various paid tiers offer additional service and productivity features.

ParagraphAI is pitched to organizations, students and professionals, and its team is confident enough in the product's abilities to invite comparisons with many of the other text generation tools on the market, including Grammarly, Wordtune, Copy.AI, Anyword and ChatGPT itself.

Shail Silver, the company’s co-founder and Executive Chair, described the software’s speed and efficiency in a release, noting that: “On average, it takes around 50 minutes to handwrite 1,000 words. ParagraphAI can write 1,000 original words in seconds.” Using AI, he added, “a writer becomes more like an editor, reviewing the AI-generated text and fine-tuning the message.”

Another part of the editor's job, ParagraphAI says, is to check the text for plagiarism and factual accuracy once it has been generated.

Research on generative AI suggests one more check that is not part of the company's instructions: AI-generated text may also need to be reviewed for bias and predetermined opinion.

In a paper titled "Co-Writing with Opinionated Language Models Affects Users' Views," Cornell University researcher Maurice Jakesch investigated whether writing assistants powered by AI language models generate some opinions more often than others, influencing what users write and what they think.

In an online experiment, his research team asked participants to write a post discussing whether social media is good for society. Writing with an opinionated language model affected the opinions participants expressed in their posts and also shifted their own views.

When they repeated the experiment with a different topic, the researchers again saw participants swayed by the writing assistants. Jakesch and the team are now investigating the mechanisms behind the effect and how long it lasts.

It might not last long if another development team, the one behind a program called GPTZero, has anything to do with it. Their AI detection tool identifies text created by GPT models. The U.S. start-up has developed tools that look for socially impactful inherent biases and pre-existing assumptions, as well as grammatical variation and text randomness, among other characteristics.

While no detector is perfect, the GPTZero team says it wants to provide everyone with the tools they need to detect AI-generated text and safely adopt AI technologies.

The revolutionary impact of generative AI tools such as these and many more is clear; equally clear is the need for guidelines, oversight, regulation and legislation that steer those tools towards productivity and efficiency and away from existing and potential threats.

# # #

ChatGPT is a generative AI-based technology that interacts with users in a seemingly human way. It is built on large language models trained on huge text data sets; both human-moderated and machine learning techniques were used in its development.

-30-