We’re all used to reading product tech specs about processor speeds, screen resolutions and bandwidth capabilities. But what if our favourite gadget or online service came with a sheet that specified the good outcomes the product will bring? Or the moral reckonings made by the developer and manufacturer before they released their product?

Shouldn’t tech specs detail the ethical obligations a product or service provider has to the consumer, to society, to the planet?

Shouldn’t we all be screaming “Yes!” about now?

Some folks already are. Tech industry initiatives like Tech for Good, the Center for Humane Technology, Truth About Tech and others are tackling the perceived inequities, negative impacts and outright threats posed by technology today. More and more people are seeking ways to bring accountability, if not regulation, to the industry that employs, enables, inspires and sometimes frightens them.

The Canadian initiative called Tech for Good calls for technology to be used and developed with a greater sense of social benefit for all.

Members of the Canadian tech community, for example, recently declared that a set of guiding principles should be followed while creating, delivering or making use of technology.

“[I]nnovating for good needs to be more firmly and deeply fixed among all Canadian industries, companies and organizations. Our challenge … is to create a culture in which everyone assumes the obligation to innovate for good,” said the Right Honourable David Johnston, former Governor General of Canada, and the founding Chair of the Rideau Hall Foundation (RHF), a Canadian charitable organization.

He spoke at the inaugural True North conference, staged in the high-tech Waterloo region of southern Ontario. Communitech, the innovation hub and tech incubator there, along with RHF, Deloitte, Ipsos Canada, the University of Waterloo and numerous other Canadian tech industry participants joined the conference to unveil the Tech for Good Declaration.

It’s described as “a living document for anyone to provide feedback and commentary on” – so it is a voluntary declaration that tech should be used as a force for good.

They’ve got their work cut out for them. Sadly, tech for bad has been doing good business.

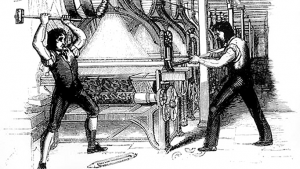

Often seen as techno-phobes, Luddites actually fought against tech makers who used machines in what they called “a fraudulent and deceitful manner” to get around standard labour practices.

Fake news, fake elections and propaganda bots. Fake audios and videos. Unwarranted tracking and unwanted surveillance. The theft, misuse or exploitation of personal data. Manipulation, compulsion and tech addiction. Exploding devices, faulty software, failing hardware. Labour regulation, social disruption and economic displacement. Bullying, cyber-stalking and PlaneBae’ing. Threats from AI, machine learning and the Internet of Things. The need for more diversity and equity in the tech workplace. The digital age triggers a new gilded age, driving economic inequality and empowering a new generation of concentrated wealth.

In response, Canadians wrote a tech declaration that speaks as much about the human element as the technological one. It says, “There must be a manual override” that allows human mediation and intervention, especially with so-called smart devices that make decisions for us. Technology should not lead to greater inequality, the authors declare, and its impact on social cohesion must be considered.

How’s that for a tech spec? Degree of social cohesion.

What about consent? Can we get really specific here?

It’s a complicated concept for sure, both in terms of our willingness to agree to tech privacy policies and terms of use, and in terms of our ability to truly understand those policies in the first place.

The Canadian Tech for Good declaration says the use of technology (and of the data it can generate) must be transparent to the user/subscriber, and that we as consumers/citizens must be able to make an informed choice about our use of or involvement with technology.

In many ways, that’s what the law says – or soon will say. New rules and regulations about informed and explicit consent, and the need to make core technology services available even when consent is withheld, are designed to empower and protect the user.

Even more government regulation is needed to keep tech good, it seems, if only because tech is becoming more capable all the time.

Facial recognition is just one case in point. The hardware and software involved in recognizing a human face and matching it to some existing set of expectations are powerful and potentially perilous.

That’s why Microsoft President Brad Smith has called for government regulation of facial recognition technology. He says we need to take a principled approach in the development and application of facial recognition technology and that we should recognize the importance of going more slowly when it comes to deployment.

Smith added that the need for government leadership does not absolve technology companies of their own ethical responsibilities, but just how companies should meet those responsibilities is not as clearly stated.

Tristan Harris from the Center for Humane Technology speaks about addictive technology at various industry events. He says tech developers make use of well-known psychological principles to get consumers to use their products more often. CHT Twitter image.

Perhaps the folks at the Center for Humane Technology have some suggestions. They’re former tech leaders, like founder Tristan Harris, who seek a more ethical underpinning for the industry, one that is designed in from the start. A former Google engineer, Harris is very familiar with something called persuasive technology design, and how tech developers make use of well-known human psychological and physiological principles to get consumers to use their products more often, and for longer periods of time.

Another founding advisor at CHT, Roger McNamee, speaks to not only the “addictive properties of smartphones” but the “cavalier attitude of some phone makers” and the “manipulative business practices” followed by some platform operators.

So, just this week, CHT and a leading U.S. kids’ advocacy group called Common Sense launched a campaign to protect young people from the potential for digital manipulation and addiction. Called Truth About Tech, the campaign aims to put pressure on the tech industry to make its products less intrusive and less addictive.

The campaign, Truth About Tech, will put pressure on the tech industry to make its products less intrusive and less addictive. The campaign says tech companies are in an “arms race for consumer attention” by making aspects of their technology very addictive. (PRNewsfoto/Common Sense)

Smartphone addiction is a real problem for sure, but the smartphone’s capacity for good or bad pales in comparison to that of artificial intelligence (AI).

In an open letter, hundreds of tech experts discuss both the great benefits and ethical challenges posed by artificial intelligence. They speak of technological potential and pitfalls in a context with much greater significance than many previous technological developments.

A ‘techlash’? A coalition of tech workers is protesting what it sees as unethical activities by leading technology companies. Apple, Amazon, Alphabet, Microsoft, and Facebook — in that order — are the five largest publicly traded companies in the world. Their sales may be doing well, but that does not mean they are doing good. CTW Twitter image.

Meanwhile, tech workers from leading companies like Amazon, Google, Microsoft and Salesforce are writing letters too, asking their CEOs to sever relationships with certain government operations, including the U.S. Immigration and Customs Enforcement agency and the Department of Defense.

Interestingly, there are historical precedents for refusing to work with a government on a technology: some scientists who worked on the atomic bomb project, including Robert Oppenheimer, known as the father of the atomic bomb, were horrified to see what it had wrought.

That was one of the points made by Canadian journalist and commentator Diane Francis at the recent ideacity conference in Toronto. Speaking as part of a digital debate about the promise or peril of technology, Francis cited Oppenheimer and his calls for a moral and ethical framework around technologies as an example worth following. “Ethical frameworks are going to be the only way forward,” she said.

Although that event is over, the debate over tech good vs. bad continues (will it ever end?). Calls for a more ethical technological environment continue, too, and that is a good thing.

-30-