Not enough can be said about the potential dangers of ubiquitous surveillance systems today, be they deployed at home, at work, in school, or in the streets.

Surveillance systems can gather data from multiple sources, including cameras, microphones, computers and computer keyboards, mobile devices, smart devices and many other industrial and consumer products.

All those gathered data points are then analyzed and interpreted by machine-learning models and artificial intelligence platforms driven by proprietary algorithms and code, written by humans with specific goals in mind.

A growing movement to counteract the efficacy of surveillance systems, and to reduce any negative impact they may have on society, is also being driven by code written by humans.

But with opposite goals in mind.

Artists, technologists, designers and engineers are developing new tools, techniques and platforms to creatively attack or undermine today’s surveillance infrastructure.

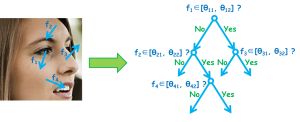

They propose to overwhelm and confuse surveillance systems and facial recognition software using inventive approaches that are part of a movement known as surveillance exclusion, many of which rely on what machine-learning researchers call adversarial attacks.

Stop at Surveillance. Or Don’t Stop.

AI researchers discovered they could use stickers on a stop sign and thus make the sign unrecognizable to computer vision algorithms. Image source: arxiv.org.

One of the simplest demonstrations of how adversarial attacks can fool a computer vision system involves the humble stop sign. Researchers discovered that by affixing a few small black and white stickers to a stop sign, they could make the sign unrecognizable to computer vision algorithms, or get it misread as a different sign entirely.

By subtly modifying an expected image (one a surveillance system has been trained to recognize using those algorithms written by humans), surveillance exclusion advocates can ‘trick’ the system into concluding that it is seeing something other than what it was trained to identify.
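To make the idea concrete, here is a minimal Python sketch of one well-known way to craft such a modification, the fast gradient sign method, applied to a toy linear "stop sign" classifier. This is a simpler, swapped-in technique, not the method used in the stop-sign research (which was designed to survive real-world printing, distance and viewing angles), and the weights, pixel values and perturbation budget are hypothetical numbers chosen only for illustration.

```python
# A minimal sketch of the fast gradient sign method (FGSM) against a toy
# linear classifier. All weights, pixels and epsilon values are hypothetical.

def score(weights, bias, pixels):
    """Linear score for a flattened image; a positive value means 'stop sign'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def sign(value):
    """Return +1, -1 or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, pixels, epsilon):
    """Nudge every pixel by at most epsilon to lower the 'stop sign' score.

    For a linear model the gradient of the score with respect to each pixel
    is simply that pixel's weight, so stepping against sign(weight) lowers
    the score as fast as possible under a per-pixel budget. Results are
    clipped to the valid [0, 1] intensity range.
    """
    return [min(1.0, max(0.0, p - epsilon * sign(w))) for w, p in zip(weights, pixels)]


# Hypothetical 16-pixel "image" and learned weights.
weights = [0.5, -0.5] * 8
bias = -0.4
clean = [0.6, 0.4] * 8
adversarial = fgsm_perturb(weights, clean, epsilon=0.1)

print(score(weights, bias, clean) > 0)        # True: read as a stop sign
print(score(weights, bias, adversarial) > 0)  # False: no longer recognized
```

Each pixel moves by only 0.1 on a 0-to-1 scale, yet the decision flips. That is the essence of an adversarial example: small changes, chosen with knowledge of how the model weighs its inputs, that matter far more to the machine than to a human viewer.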

Likewise, researchers at Carnegie Mellon University discovered that by donning special (and sometimes goofy-looking) glasses, they could fool facial recognition algorithms into mistaking them for celebrities!

Such visual transformations are advocated by organizations campaigning against the use of facial recognition and biometric technologies, whether those technologies are used by public security authorities or private for-profit companies.

To be really safe, hack your face, some organizations recommend. Maybe your children’s faces too.

Face the Facts: Facial Recognition is Everywhere

The desire to hack the face by confusing facial recognition software with extraneous patterns, colours or shapes is well represented in the work of anti-surveillance pioneer Adam Harvey. Based in Germany, he has developed tools that can obscure or camouflage the human face from surveillance systems.

HyperFace was one such project, although the technology is not for sale. Harvey also developed CV Dazzle, an open-source project that encourages the use of flamboyant make-up and crazy hairstyles to create an unrecognizable “anti-face”.

CV Dazzle introduced low-cost methods for breaking computer vision algorithms by working against face detectors, which typically look for characteristic patterns of dark and light areas on a face, such as eye regions that are darker than the cheeks below them.
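The “dark and light areas” idea can be illustrated with a single two-rectangle Haar-like feature, the kind of building block used by the classic Viola-Jones face detector. The Python sketch below is a deliberate simplification: a real detector combines thousands of such features in a trained cascade, and the tiny images and threshold here are hypothetical values, not anything taken from CV Dazzle itself.

```python
# One two-rectangle Haar-like feature, as used (many times over) by
# Viola-Jones style face detectors. Images are lists of rows of grayscale
# values from 0 (black) to 255 (white). All values below are hypothetical.

def region_mean(image, top, bottom):
    """Average brightness of rows top..bottom-1."""
    values = [v for row in image[top:bottom] for v in row]
    return sum(values) / len(values)


def eye_band_feature(image, split):
    """Cheek band minus eye band: large and positive when the eyes are darker."""
    return region_mean(image, split, len(image)) - region_mean(image, 0, split)


THRESHOLD = 40  # hypothetical; real detectors learn thresholds from training data

# A dark eye band above lighter cheeks, as a detector expects.
face = [[60] * 6, [60] * 6, [180] * 6, [180] * 6]
# Make-up that brightens the eye area and darkens the cheeks inverts the contrast.
dazzled = [[200] * 6, [200] * 6, [70] * 6, [70] * 6]

print(eye_band_feature(face, split=2) > THRESHOLD)     # True: looks face-like
print(eye_band_feature(dazzled, split=2) > THRESHOLD)  # False: feature not triggered
```

Breaking that one light-dark relationship is roughly what bold make-up patterns aim for at scale: if enough of the expected contrasts are inverted or broken up, the detector may never conclude there is a face to recognize in the first place.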

In a technology and surveillance workshop that explored Harvey’s ideas and techniques, participants creatively used hairstyles, make-up palettes, patterned clothes, shoes, accessories and other fashion items as a way to protect their privacy from computer vision surveillance systems.

Harvey has also come up with anti-drone hijabs and hoodies, made with shiny metallized fabrics designed to counter overhead thermal-imaging surveillance.

Sometimes, surveillance cameras are trained simply to read license plates, not faces, but the risk to personal privacy and data protection can be just as great. Sure enough, there are adversarial responses to automatic license plate readers (ALPRs).

And the folks at Adversarial Patterns have a line of clothes and fashion accessories that reproduce, in fabric, images of modified license plates. The patterns are intended to look pleasing to the human eye but to be read as plates by the camera eye, so that false data are entered into an ALPR system and its associated database, undercutting its efficacy.

Clothing, Jewellery and Surveillance Disruption

The Incognito mask was developed to respond to and counteract the increased use of facial recognition technology. Image source: NOMA Studio

Another trend in surveillance exclusion is what its creators call “face jewellery”. Designers Ewa Nowak and Jarosław Markowicz of NOMA Studio, for example, developed the Incognito mask to respond to and counteract the increased use of facial recognition technology.

Three fairly simple design elements are combined to partially conceal the wearer’s face and confuse surveillance cameras: two brass circles positioned below the wearer’s eyes and a rectangular bar across the wearer’s forehead.

In effect, this throws a face-recognition algorithm off its game: with what look like “four eyes” instead of two, the typical landmarks of a face are not immediately identifiable.

“Every day, hundreds of cameras are watching us, facial recognition systems are becoming more and more perfect, and the place of current speculations about the future is occupied by sophisticated and advanced technology,” said the designers in a statement about their concerns. “Cameras are able to recognize our age, mood, or sex and precisely match us to the database — the concept of disappearing in the crowd ceases to exist.”

Designer Jip van Leeuwenstein created a transparent mask with a lens shape to fool video surveillance systems. Image source: www.jipvanleeuwenstein.nl

Designer Jip van Leeuwenstein, meanwhile, created a transparent mask that people can easily wear but that is shaped like a lens, again to fool surveillance systems. Machines have trouble recognizing the face behind the mask, yet human beings can still read facial expressions and recognize familiar people.

Surveillance and Copyright Violations Loom Large In My Life

But if obscuring your face, changing your clothes and putting on make-up is not your idea of thwarting surveillance, maybe you should sing a happy song.

A copyrighted happy song. And then just try and post a video of yourself singing that copyrighted tune.

Leading social media platforms and video streaming sites all tightly control material that is protected by copyright but is nevertheless improperly or illegally posted to their services. Automated content-identification systems, such as YouTube’s Content ID, continually scan uploads for copyright-protected material; once they find a match, the content may be muted, blocked or taken down.

The fact that police may be using this unusual sonic hack to thwart citizen journalists who document police activity shows just how far the surveillance exclusion landscape has evolved.

Reports allege that officers of the Beverly Hills Police Department have tried this tactic. Police apparently played pop songs on their own phones whenever a citizen activist showed up with a smartphone recording video. Copyright-protected songs, like In My Life by The Beatles, may have been used.

Yikes! While I am all in favour of developing a greater awareness of surveillance systems, and even of ways to thwart those systems when all they seem to do is erode our civil liberties and personal privacy, I may not be ready to trade The Beatles (or the greatest song ever) for my freedom!!

# # #