Facial coding relies on advanced artificial intelligence techniques and digital media technologies to recognize and measure human emotions in real time.

Because facial expressions are spontaneous and reflective, expressed by muscles that are linked directly to the brain and controlled by both conscious and unconscious factors, facial coding and emotional recognition technologies have the power to transform industry and society through informed decision-making, if they are deployed responsibly.

Today, facial coding and emotional recognition strategies are widely used in marketing, communications, public relations, and elsewhere to test how well any product, service, or content can elicit an emotional response and trigger a consumer reaction.

Yet advanced platforms that now incorporate computer-based tools such as artificial intelligence, machine learning, biosensors, eye-tracking solutions, and more are in fact based on concepts developed roughly one hundred and fifty years ago.

Emotional Evolution, Technological Revolution

In 1872, thirteen years after he published On the Origin of Species, Charles Darwin released The Expression of the Emotions in Man and Animals.

In it, Darwin documented how different cultures (and species), whether infant or adult, cat or dog, express their feelings. More recent research confirmed his insight: facial expressions are consistent across geography and culture, and individuals from different backgrounds can interpret the facial expressions of other groups very accurately.

In the early 1970s, researchers such as Carl-Herman Hjortsjö, Paul Ekman, and Wallace Friesen set about identifying those basic, universal facial expressions. Specific facial muscles and movements, they found, could be linked to very specific emotions: anger, happiness, surprise, disgust, sadness, or fear.

A guidebook to their findings, called the Facial Action Coding System (FACS), was published in 1978.

It is a map of the human face, and various map coordinates represent different facial muscles, positions, and movements, each associated with an emotion that is itself a response to stimuli. FACS has almost two dozen facial motions or movements categorized as expressions of emotion.

Being able to read those cues, to follow that map, was and is seen as an actionable way to detect, quantify, and respond to someone’s real-time emotional, even non-conscious, responses.
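To make the "map" idea concrete, here is a minimal sketch in Python of how combinations of facial movements might be looked up as emotions. The action unit (AU) numbers are simplified prototype combinations that are commonly cited for FACS-based emotion coding; they are not taken from this article, and the names `EMOTION_PROTOTYPES` and `match_emotions` are hypothetical, chosen only for illustration.

```python
# Simplified, commonly cited prototype combinations of FACS action units (AUs).
# Illustrative assumption only: real coding also weighs intensity, timing,
# asymmetry, and many variant combinations.
EMOTION_PROTOTYPES = {
    "happiness": {6, 12},            # cheek raiser, lip corner puller
    "sadness": {1, 4, 15},           # inner brow raiser, brow lowerer, lip corner depressor
    "surprise": {1, 2, 5, 26},       # brow raisers, upper lid raiser, jaw drop
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},          # brow lowerer, lid raiser, lid tightener, lip tightener
    "disgust": {9, 15, 17},          # nose wrinkler, lip corner depressor, chin raiser
}


def match_emotions(active_aus):
    """Return, in alphabetical order, every emotion whose full prototype
    is contained in the set of observed action units."""
    observed = set(active_aus)
    return sorted(
        name for name, aus in EMOTION_PROTOTYPES.items() if aus <= observed
    )


if __name__ == "__main__":
    # A raised cheek plus pulled lip corners matches the happiness prototype.
    print(match_emotions([6, 12]))
```

Because the lookup is a plain subset test, a rich expression can match more than one prototype at once, which is one reason practical systems score intensities rather than relying on exact matches.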

Behavioural researchers see the face as a window into the true attitudes, reactions, preferences, and biases of their human subjects. Automated computer algorithms and advanced biosensing technologies allow researchers to incorporate that human feedback more quickly and, they say, more accurately into interface design, ad and product testing, UX and UI research, mobile app development, gamification techniques, website development, retail planning and product placement, and post-event engagement evaluation, among other disciplines.

The computer algorithm for facial coding analyzes movement, shape, and texture in different areas of the human face. It is possible and now practical to track even the tiniest facial muscle movements and translate them into emotional expressions that convey happiness, surprise, sadness, or anger.
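The translation step described above can be sketched as follows, assuming some upstream detector has already turned each video frame into a set of facial-movement intensities between 0 and 1. That detector, the `PROTOTYPES` table, the 0.5 threshold, and the function names are all assumptions made for illustration; commercial platforms use their own proprietary models.

```python
# Hypothetical input: one dict per video frame, mapping a FACS action unit
# number to an intensity in the range 0..1 (as produced by some detector).
PROTOTYPES = {
    "happiness": (6, 12),
    "surprise": (1, 2, 5, 26),
    "sadness": (1, 4, 15),
    "anger": (4, 5, 7, 23),
}


def score_frame(au_intensity):
    """Score each emotion as the mean intensity of its component action units.
    Action units missing from the frame count as zero."""
    return {
        emotion: sum(au_intensity.get(au, 0.0) for au in aus) / len(aus)
        for emotion, aus in PROTOTYPES.items()
    }


def label_frames(frames, threshold=0.5):
    """Label each frame with its top-scoring emotion, or 'neutral' when
    no emotion reaches the threshold."""
    labels = []
    for au_intensity in frames:
        scores = score_frame(au_intensity)
        best = max(scores, key=scores.get)
        labels.append(best if scores[best] >= threshold else "neutral")
    return labels


if __name__ == "__main__":
    recording = [
        {6: 0.9, 12: 0.8},                  # strong smile
        {1: 0.1},                           # barely any movement
        {1: 0.8, 2: 0.7, 5: 0.9, 26: 0.6},  # raised brows, dropped jaw
    ]
    print(label_frames(recording))
```

Averaging intensities is the simplest possible scoring rule; it is used here only because it keeps the link between muscle movement and emotion label easy to follow.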

Those tracking and analysis activities use the cameras embedded in laptops, tablets, and mobile phones (or standalone webcams and outboard video cameras) to record the facial responses of test subjects as they are shown some kind of content. As such, facial coding can be practiced almost anywhere: a person’s home, workplace, car, a building, a streetscape, or other mediated environment.

Facial Coding in Action

Facial recognition technologies, as has been widely reported, are now deployed throughout society, and are often, although not always, immediately recognizable in public and private settings.

EyeSee provides specialized webcam-based facial coding services and supports for online properties like Twitter feeds. EyeSee image.

Facial coding and emotional recognition technologies may be less easily recognized, but they too are widely used: major research companies apply them to pre- and post-event testing of marketing communications, on-site and online advertising campaigns, and more.

Ipsos, the global market research and public opinion firm, is among them; Ipsos Connect, its branding and communications arm, is using emotional recognition technology (ERT) to gain a deeper understanding of how consumers respond emotionally to brand communications.

Working with a company called Realeyes, Ipsos uses computers, trained with AI and machine learning, to measure and understand consumers’ emotions and attention levels.

Realeyes wants the facial coding technique to be even more widely deployed (and understood): it has even released what’s called “a fun app” to demonstrate emotional recognition techniques.

Visitors to the company’s website are encouraged to help it improve its facial recognition capabilities by allowing it to use the front camera on their PC to track their expressions as they browse. The company assures consenting web visitors that their “identity is unknown, and the [captured] data is anonymous”.

Other companies such as EyeSee also provide specialized webcam-based facial coding services and support.

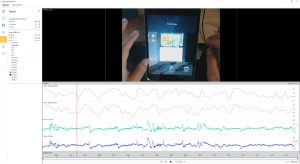

Building on those more than one hundred years of facial expression analysis knowledge, the analysis company iMotions has developed its Facial Expression Analysis Module to gain insights into live or even recorded consumer behaviour via its built-in analysis and visualization tools. Data can also be exported, the company notes, for additional analysis.

The iMotions platform for automated facial expression analysis was developed to analyze human behaviour while interacting with content, products, or services. iMotions image

The iMotions platform for automated facial expression analysis was developed in partnership with Affectiva, an MIT spin-off focused on the use of technology to analyze human behaviour. iMotions and konversionsKRAFT, a data analysis firm, work together to aggregate and analyze metrics for consumer attention, engagement, arousal/stress, and emotional valence.
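How such metrics might be aggregated across a panel of test subjects can be sketched in a few lines of Python. This is an illustrative assumption, not the method used by iMotions, Affectiva, or konversionsKRAFT: the data layout, the metric names, and the `aggregate_metrics` function are hypothetical.

```python
from statistics import mean


def aggregate_metrics(sessions):
    """Average each metric across respondents, second by second.

    `sessions` maps a respondent id to a list of per-second dicts such as
    {"attention": 0.8, "valence": 0.2}. Recordings may differ in length;
    each second is averaged over the respondents who have data for it.
    """
    longest = max((len(s) for s in sessions.values()), default=0)
    timeline = []
    for t in range(longest):
        samples = [s[t] for s in sessions.values() if t < len(s)]
        names = sorted({name for sample in samples for name in sample})
        timeline.append({
            name: mean(sample[name] for sample in samples if name in sample)
            for name in names
        })
    return timeline


if __name__ == "__main__":
    panel = {
        "respondent_1": [
            {"attention": 1.0, "valence": 0.5},
            {"attention": 0.5, "valence": -0.5},
        ],
        "respondent_2": [
            {"attention": 0.5, "valence": -0.5},
        ],
    }
    for second, metrics in enumerate(aggregate_metrics(panel)):
        print(second, metrics)
```

A second-by-second average of this kind lets an analyst line up dips in attention or swings in valence with specific moments in the content being tested.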

Teaching Technology to be More Human

Facial coding is seen as an objective method for measuring emotions, based on the spontaneity of facial expressions and the human face’s own direct link to the brain and its sometimes mysterious workings.

But those mysteries can introduce underlying biases in any observation and analysis we undertake. AI tools often exhibit, whether intentionally or not, the underlying prejudices, preconceptions, and emotional expectations of the coders who wrote them (or of the society that produced those coders).

Yes, facial coding and emotional AI tools have the power to transform our lives, but do they have the ability to do so responsibly?

As human beings, we have been reading facial expressions since the moment we were born: we have drawn nurture from a mother’s smile, taken admonishment from a father’s frown, and seen anxiety in the face of someone living through pandemic lockdown. Barring real emotional damage or physiological impairment, we all know how to read faces, and without formal training.

That cannot be said for technology. It’s got to be carefully taught.

Because emotional cues can be expressed and observed, if not actually understood, more quickly than most verbal communication, improved recognition of, and shared respect for, the sources and signs of human emotion is crucial to the survival of our social and physical environment.

Mapping the human face can reveal key emotions, attitudes, and preconceptions. iMotions image.
